Neural Turing Machines

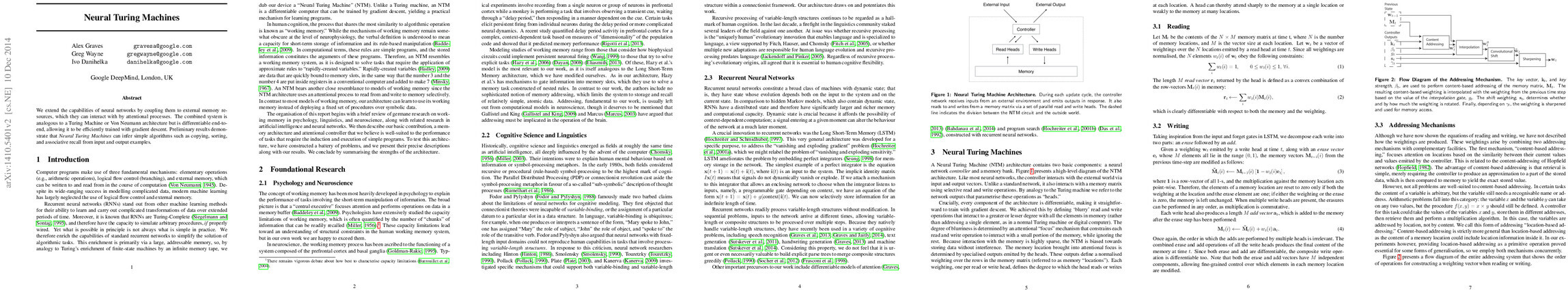

Abstract: We extend the capabilities of neural networks by coupling them to external memory resources, which they can interact with by attentional processes. The combined system is analogous to a Turing Machine or Von Neumann architecture but is differentiable end-to-end, allowing it to be efficiently trained with gradient descent. Preliminary results demonstrate that Neural Turing Machines can infer simple algorithms such as copying, sorting, and associative recall from input and output examples.

Synopsis

Overview

- Keywords: Neural Turing Machines, memory networks, algorithm learning, recurrent neural networks, attention mechanisms

- Objective: Introduce a neural network architecture that couples a controller network to an external memory, extending the range of computations the network can learn.

- Hypothesis: Neural Turing Machines can learn and execute simple algorithms by interacting with an external memory, outperforming traditional recurrent neural networks (RNNs) on tasks that require memory and algorithmic reasoning.

Background

Preliminary Theories:

- Working Memory: A cognitive system that temporarily holds and manipulates information, crucial for tasks requiring short-term data processing.

- Recurrent Neural Networks (RNNs): A class of neural networks designed for sequential data. They maintain state information over time but often struggle with long-term dependencies.

- Turing Completeness: The ability of a computational system to simulate any Turing machine. RNNs are known to be Turing-complete, so in theory they can perform any computation if properly structured.

- Attention Mechanisms: Techniques that allow models to focus on specific parts of the input data, enhancing the ability to process sequences by selectively reading from memory.

Prior Research:

- 1995: RNNs were shown to be Turing-complete, indicating their potential for complex computations.

- 1997: Introduction of Long Short-Term Memory (LSTM) networks, addressing the vanishing gradient problem in RNNs, allowing better retention of information over longer sequences.

- 2013: Development of differentiable attention models, which laid the groundwork for integrating attention with memory in neural architectures.

Methodology

Key Ideas:

- Architecture: The NTM consists of a neural network controller and an external memory matrix, allowing for dynamic read and write operations.

- Differentiable Operations: The read and write mechanisms are designed to be differentiable, enabling the use of gradient descent for training.

- Content and Location Addressing: The NTM employs both content-based and location-based addressing to access memory, enhancing its ability to manipulate stored data.
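The key ideas above can be made concrete with a small sketch. The following is a minimal, standard-library Python illustration, not the authors' implementation: memory is a list of rows, content-based addressing is a softmax over scaled cosine similarities, location-based addressing is a circular shift of the weights, and reads and writes are weighted so that every step stays differentiable in principle. The function names and the dictionary form of `shift_dist` are assumptions made for illustration, and the paper's interpolation gate and sharpening step are omitted for brevity.

```python
import math

def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + 1e-8)  # small epsilon avoids division by zero

def content_weights(memory, key, beta):
    """Content-based addressing: softmax over beta-scaled similarities."""
    scores = [beta * cosine_similarity(row, key) for row in memory]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def shift_weights(weights, shift_dist):
    """Location-based addressing: circular convolution with a shift distribution.

    shift_dist maps an integer offset (e.g. -1, 0, +1) to its probability
    (a hypothetical representation chosen for this sketch).
    """
    n = len(weights)
    shifted = [0.0] * n
    for j, w in enumerate(weights):
        for offset, p in shift_dist.items():
            shifted[(j + offset) % n] += w * p
    return shifted

def read(memory, weights):
    """Read vector: weighted sum of memory rows."""
    width = len(memory[0])
    return [sum(w * row[c] for w, row in zip(weights, memory)) for c in range(width)]

def write(memory, weights, erase, add):
    """Write: erase, then add, each scaled by the row's weight. Returns new memory."""
    return [
        [m * (1.0 - w * e) + w * a for m, e, a in zip(row, erase, add)]
        for w, row in zip(weights, memory)
    ]

# Usage: find the row matching a key, then step to the next row and read it.
memory = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
w = content_weights(memory, key=[1.0, 0.0], beta=50.0)  # focuses on row 0
w_next = shift_weights(w, {1: 1.0})                     # move focus to row 1
r = read(memory, w_next)                                # close to [0.0, 1.0]
```

A large `beta` makes the content weighting nearly one-hot, while a small `beta` spreads the focus over several rows. Because the weights are soft rather than discrete indices, gradients can flow through both the read and the write.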

Experiments:

- Copy Task: Evaluated the NTM's ability to store and recall sequences of binary vectors, demonstrating superior performance over LSTM in generalizing to longer sequences.

- Repeat Copy Task: Tested the NTM's capability to output a copied sequence multiple times, simulating a "for loop" behavior.

- Associative Recall: Assessed the NTM's ability to retrieve subsequent items from a list based on a query, showcasing its handling of more complex data structures.

- Priority Sort: Investigated the NTM's sorting capabilities, requiring it to sort binary vectors based on associated priority ratings.
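To make the input/output structure of these tasks concrete, here is a hedged sketch of how training examples might be generated. It is a simplification under stated assumptions, not the paper's data pipeline: delimiter channels are omitted, each associative-recall item is a single vector rather than a short sequence, the sort order (highest priority first) is assumed, and all function names and parameters are hypothetical.

```python
import random

def random_bit_vectors(count, width, rng):
    """Generate `count` random binary vectors of length `width`."""
    return [[rng.randint(0, 1) for _ in range(width)] for _ in range(count)]

def copy_example(length, width, rng):
    """Copy task: the target is the input sequence, unchanged."""
    inputs = random_bit_vectors(length, width, rng)
    return inputs, [vec[:] for vec in inputs]

def repeat_copy_example(length, width, repeats, rng):
    """Repeat copy task: the target is the input sequence repeated `repeats` times."""
    inputs = random_bit_vectors(length, width, rng)
    target = [vec[:] for _ in range(repeats) for vec in inputs]
    return inputs, target

def associative_recall_example(num_items, width, rng):
    """Associative recall: query with one item; the target is the item after it.

    Requires num_items >= 2 so that the queried item has a successor.
    """
    items = random_bit_vectors(num_items, width, rng)
    query_index = rng.randrange(num_items - 1)
    return items, items[query_index], items[query_index + 1]

def priority_sort_example(count, width, rng):
    """Priority sort: the target is the vectors ordered by their priority.

    Priorities are drawn uniformly from [-1, 1]; descending order is an assumption.
    """
    vectors = random_bit_vectors(count, width, rng)
    priorities = [rng.uniform(-1.0, 1.0) for _ in range(count)]
    order = sorted(range(count), key=lambda i: priorities[i], reverse=True)
    return list(zip(vectors, priorities)), [vectors[i] for i in order]

# Usage: a seeded generator keeps the examples reproducible.
rng = random.Random(0)
inputs, target = copy_example(length=5, width=8, rng=rng)
```

Generalization to longer sequences can then be probed by training on short `length` values and evaluating on larger ones.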

Implications: The design of the NTM allows for enhanced memory manipulation and algorithmic execution, providing a framework for future research in neural architectures that require memory and reasoning.

Findings

Outcomes:

- NTMs learned faster than LSTM baselines on the tasks above and significantly outperformed them where long-term memory and algorithmic processing were required.

- The architecture demonstrated the ability to generalize well beyond training data, successfully executing tasks with longer sequences than it was trained on.

- NTMs learned compact internal programs for tasks, indicating a form of algorithmic learning.

Significance: The work is a substantial advance over traditional RNNs and LSTMs on these tasks. It highlights the importance of external memory in neural computation and challenges the belief that neural networks are poorly suited to algorithmic tasks.

Future Work: Further exploration of more complex algorithms, integration with other neural architectures, and applications in real-world tasks requiring memory and reasoning.

Potential Impact: Advancements in NTM research could lead to significant improvements in areas such as natural language processing, robotics, and cognitive computing, where memory and algorithmic reasoning are critical.