Two-Stream Convolutional Networks for Action Recognition in Videos

Abstract: We investigate architectures of discriminatively trained deep Convolutional Networks (ConvNets) for action recognition in video. The challenge is to capture the complementary information on appearance from still frames and motion between frames. We also aim to generalise the best performing hand-crafted features within a data-driven learning framework. Our contribution is three-fold. First, we propose a two-stream ConvNet architecture which incorporates spatial and temporal networks. Second, we demonstrate that a ConvNet trained on multi-frame dense optical flow is able to achieve very good performance in spite of limited training data. Finally, we show that multi-task learning, applied to two different action classification datasets, can be used to increase the amount of training data and improve the performance on both. Our architecture is trained and evaluated on the standard video actions benchmarks of UCF-101 and HMDB-51, where it is competitive with the state of the art. It also exceeds by a large margin previous attempts to use deep nets for video classification.

Synopsis

Overview

- Keywords: Action recognition, two-stream ConvNets, optical flow, multi-task learning, video classification

- Objective: Investigate architectures of deep Convolutional Networks for action recognition in videos by capturing spatial and temporal information.

- Hypothesis: The integration of spatial and temporal networks in a two-stream architecture will enhance action recognition performance compared to traditional methods.

- Innovation: Introduction of a two-stream ConvNet architecture that separately processes spatial and temporal information, leveraging optical flow for motion recognition.

Background

Preliminary Theories:

- Two-Stream Hypothesis: Suggests that the human visual system processes visual information through two pathways: one for object recognition (ventral stream) and another for motion recognition (dorsal stream).

- Optical Flow: A technique to estimate motion between frames, providing essential information for understanding dynamic scenes.

- Convolutional Neural Networks (ConvNets): Deep learning models that excel in image classification tasks, adapted here for video action recognition.

Prior Research:

- 2011: Introduction of dense trajectories for action recognition, which improved performance over sparse feature extraction methods.

- 2013: Development of improved dense trajectories (IDT) that utilized higher-dimensional encodings for better accuracy.

- 2014: Research comparing various ConvNet architectures for action recognition, highlighting the challenges of learning spatio-temporal features from raw video frames.

Methodology

Key Ideas:

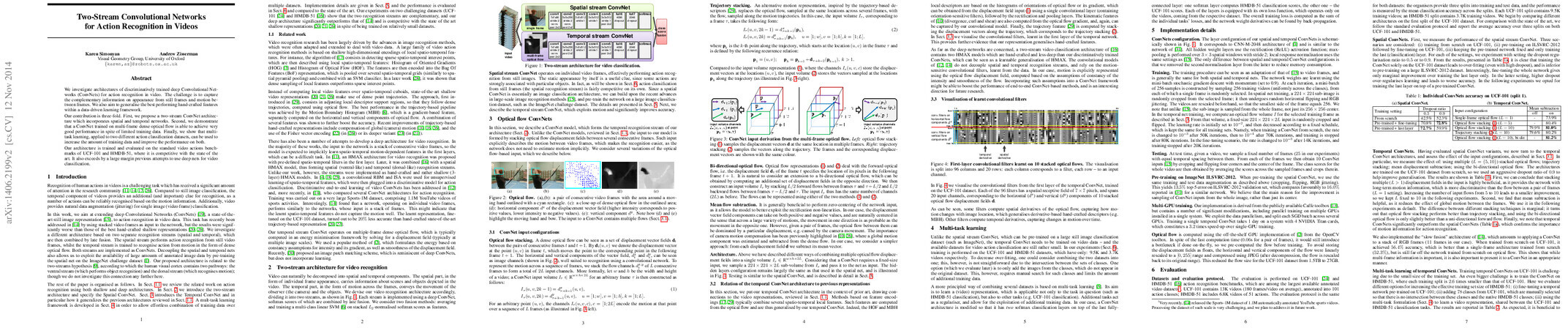

- Two-Stream Architecture: Consists of a spatial stream (processing still frames) and a temporal stream (processing optical flow), combined via late fusion.

- Optical Flow ConvNets: The temporal stream utilizes stacked optical flow fields as input, explicitly capturing motion information.

- Multi-Task Learning: A framework that allows simultaneous training on multiple datasets (UCF-101 and HMDB-51) to enhance model generalization and performance.

Experiments:

- Evaluation on UCF-101 and HMDB-51 datasets, measuring accuracy through various fusion methods (averaging and SVM).

- Ablation studies to assess the impact of different input configurations for the temporal ConvNet, including the number of stacked optical flows and mean displacement subtraction.

Implications: The design allows for effective utilization of both spatial and temporal features, improving action recognition capabilities in videos.

Findings

Outcomes:

- The two-stream model achieved significant improvements in accuracy over single-stream approaches, with the best performance observed when using SVM for score fusion.

- The temporal ConvNet outperformed spatial ConvNet alone, emphasizing the importance of motion information in action recognition.

- Multi-task learning provided additional benefits, leading to better generalization across datasets.

Significance: The research demonstrates that decoupling spatial and temporal processing in ConvNets leads to superior performance compared to previous deep learning approaches that did not explicitly separate these features.

Future Work: Exploration of more sophisticated pooling techniques over spatio-temporal tubes and improved handling of camera motion could further enhance model performance.

Potential Impact: Advancements in this area could lead to more robust action recognition systems applicable in various domains, including surveillance, human-computer interaction, and sports analytics.