LLaMA: Open and Efficient Foundation Language Models

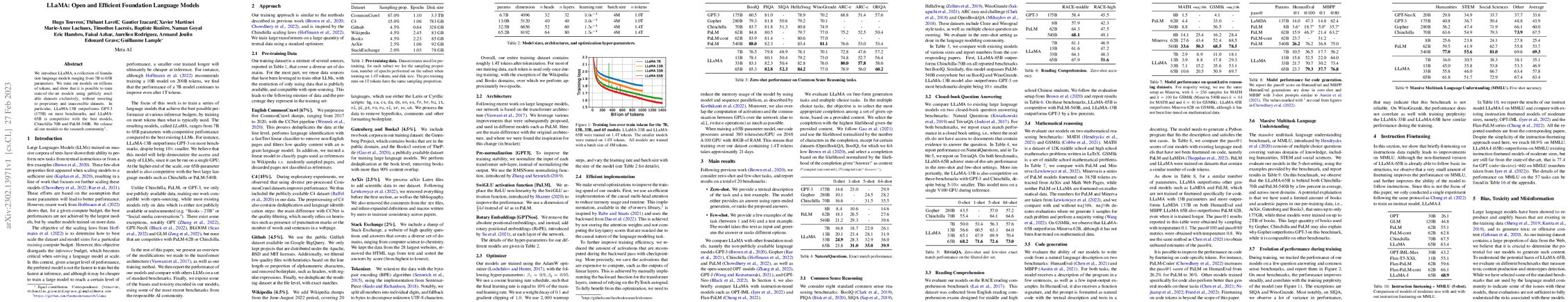

Abstract: We introduce LLaMA, a collection of foundation language models ranging from 7B to 65B parameters. We train our models on trillions of tokens, and show that it is possible to train state-of-the-art models using publicly available datasets exclusively, without resorting to proprietary and inaccessible datasets. In particular, LLaMA-13B outperforms GPT-3 (175B) on most benchmarks, and LLaMA-65B is competitive with the best models, Chinchilla-70B and PaLM-540B. We release all our models to the research community.

Synopsis

Overview

- Keywords: Language Models, LLaMA, Open Source, Efficiency, Foundation Models

- Objective: Develop a series of foundation language models (LLaMA) that achieve competitive performance using only publicly available datasets.

- Hypothesis: For a fixed inference budget, smaller models trained on more tokens can match or outperform larger models trained on fewer tokens, even if they cost more to train.

- Innovation: A family of LLaMA models from 7B to 65B parameters, trained exclusively on publicly available data, that reach state-of-the-art or competitive performance and are released to the research community.
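The inference-budget argument can be made concrete with a common rule of thumb, which is an assumption here rather than a figure from the paper: generating one token costs roughly 2N FLOPs for a model with N parameters, ignoring attention terms that grow with context length. Under that approximation, per-token cost scales linearly with parameter count. The helper names below are hypothetical.

```python
# Rough per-token inference cost, assuming ~2 FLOPs per parameter per generated token.
# This rule of thumb ignores attention costs that grow with context length.


def inference_flops_per_token(num_params: float) -> float:
    """Approximate forward-pass FLOPs needed to generate one token."""
    return 2.0 * num_params


def cost_ratio(small_params: float, large_params: float) -> float:
    """How many times more FLOPs per token the larger model needs."""
    return inference_flops_per_token(large_params) / inference_flops_per_token(small_params)


if __name__ == "__main__":
    pairs = [("LLaMA-13B", 13e9, "GPT-3", 175e9), ("LLaMA-65B", 65e9, "PaLM", 540e9)]
    for small, n_small, large, n_large in pairs:
        print(f"{large} needs ~{cost_ratio(n_small, n_large):.1f}x the per-token FLOPs of {small}")
```

By this crude measure GPT-3 (175B) needs roughly 13.5× the per-token compute of LLaMA-13B, which is why a smaller model that matches it is attractive even if it took more tokens to train.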

Background

Preliminary Theories:

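- Scaling Laws: The research builds on the observation that performance improves with both model size and training data, and that for a fixed training compute budget the best results come not from the largest models but from smaller models trained on more data. LLaMA shifts the target from training compute to inference cost.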

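- Transformer Architecture: The models are based on the transformer architecture, now the standard for natural language processing, with three modifications drawn from earlier work: pre-normalization with RMSNorm (as in GPT-3), the SwiGLU activation (as in PaLM), and rotary positional embeddings (as in GPTNeo).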

- Few-Shot Learning: The ability of models to generalize from a few examples is a key aspect of their design and evaluation.

- Open Data Utilization: Emphasizes the importance of using publicly available datasets to ensure transparency and accessibility in AI research.
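The LLaMA paper describes pre-normalization with RMSNorm and a SwiGLU feed-forward block as two of its departures from the original transformer. The sketch below shows both on a single vector in plain Python; it is illustrative only, the weights in the usage example are hypothetical toy values, rotary embeddings and attention are omitted, and a real implementation would use batched tensor operations.

```python
import math


def rms_norm(x, weight, eps=1e-6):
    """RMSNorm: rescale x by its root mean square, then apply a learned gain (no mean, no bias)."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for w, v in zip(weight, x)]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def silu(z):
    """SiLU (Swish) activation: z * sigmoid(z)."""
    return z * sigmoid(z)


def matvec(matrix, x):
    """Multiply a matrix given as a list of rows by a vector."""
    return [sum(m * v for m, v in zip(row, x)) for row in matrix]


def swiglu_ffn(x, w1, w3, w2):
    """SwiGLU feed-forward block: W2 @ (SiLU(W1 @ x) * (W3 @ x))."""
    gate = [silu(g) for g in matvec(w1, x)]
    up = matvec(w3, x)
    return matvec(w2, [g * u for g, u in zip(gate, up)])


def pre_norm_ffn_sublayer(x, norm_weight, w1, w3, w2):
    """Pre-normalization residual: normalize the sub-layer input, then add x back."""
    update = swiglu_ffn(rms_norm(x, norm_weight), w1, w3, w2)
    return [a + b for a, b in zip(x, update)]


if __name__ == "__main__":
    x = [1.0, -2.0, 0.5, 3.0]
    identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    print(pre_norm_ffn_sublayer(x, [1.0] * 4, identity, identity, identity))
```

The paper sizes the SwiGLU hidden layer at 2/3 · 4d rather than 4d; with three weight matrices instead of two, that keeps the block at about the same 8d² parameters as a standard feed-forward layer.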

Prior Research:

- GPT-3 (2020): Demonstrated the effectiveness of large language models with 175 billion parameters, setting a benchmark for subsequent models.

- Chinchilla (2022): Refined the scaling laws, showing that for a fixed compute budget, smaller models trained on more data outperform larger models trained on less; LLaMA adopts this idea but trains even longer to favor cheaper inference.

- PaLM (2022): Scaled a dense transformer to 540B parameters with strong results, prompting further exploration of efficiency in model training and deployment.

- OPT and BLOOM (2022): Open-source models that contributed to the discourse on transparency and accessibility in AI.
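The Chinchilla result is often summarized as roughly 20 training tokens per parameter; the LLaMA paper cites the matching recommendation of a 10B model on 200B tokens, and notes that its 7B model kept improving after 1T tokens. The comparison below uses that heuristic plus the common approximation that training costs about 6·N·D FLOPs for N parameters and D tokens. Both are assumptions for illustration, not figures from the paper; the model sizes and token counts are the reported ones.

```python
# Compare LLaMA token budgets with a Chinchilla-style heuristic of ~20 tokens per parameter.
# Both the 20x ratio and the 6 * N * D training-FLOPs formula are rough approximations.

TOKENS_PER_PARAM = 20


def compute_optimal_tokens(num_params: float) -> float:
    """Approximate compute-optimal number of training tokens for a model of this size."""
    return TOKENS_PER_PARAM * num_params


def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate training cost: ~6 FLOPs per parameter per token (forward + backward)."""
    return 6.0 * num_params * num_tokens


# (parameters, training tokens) as reported for the four LLaMA models
LLAMA_RUNS = {
    "LLaMA-7B": (6.7e9, 1.0e12),
    "LLaMA-13B": (13.0e9, 1.0e12),
    "LLaMA-33B": (32.5e9, 1.4e12),
    "LLaMA-65B": (65.2e9, 1.4e12),
}

if __name__ == "__main__":
    for name, (n, d) in LLAMA_RUNS.items():
        excess = d / compute_optimal_tokens(n)
        print(f"{name}: {d / n:.0f} tokens/param, {excess:.1f}x the heuristic, "
              f"~{training_flops(n, d):.1e} training FLOPs")
```

Under this heuristic the 7B model sees about 7× more tokens than a compute-optimal run would use, which is the regime where the paper reports it still improving.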

Methodology

Key Ideas:

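- Data Utilization: Trained on a mixture of publicly available sources: English CommonCrawl (67%), C4 (15%), GitHub (4.5%), Wikipedia (4.5%), Books (4.5%), ArXiv (2.5%), and StackExchange (2%). Only data compatible with open sourcing was used, and the full corpus contains roughly 1.4T tokens after tokenization.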

- Model Scaling: Trained four sizes (7B, 13B, 33B, and 65B parameters), the 7B and 13B models on 1.0T tokens and the 33B and 65B models on 1.4T tokens, trading extra training compute for cheaper inference.

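- Training Techniques: Used an efficient causal attention implementation that neither stores attention weights nor computes masked scores, activation checkpointing that saves expensive activations (such as linear-layer outputs) instead of recomputing them, and model and sequence parallelism to reduce memory usage, overlapping computation with inter-GPU communication. With these optimizations the 65B model processed about 380 tokens/sec/GPU on 2048 A100 GPUs with 80GB of RAM, so a 1.4T-token run took approximately 21 days.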
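The data mixture above is specified as sampling proportions rather than raw dataset sizes. A minimal sketch of drawing the source of each training sample under those proportions with Python's `random.choices`; `MIXTURE` holds the reported percentages, while `sample_sources` is a hypothetical helper standing in for a real data loader.

```python
import random
from collections import Counter

# Sampling proportions of the LLaMA pre-training mixture, as reported in the paper.
MIXTURE = {
    "CommonCrawl": 0.670,
    "C4": 0.150,
    "GitHub": 0.045,
    "Wikipedia": 0.045,
    "Books": 0.045,
    "ArXiv": 0.025,
    "StackExchange": 0.020,
}


def sample_sources(num_samples: int, seed: int = 0) -> list[str]:
    """Pick the source of each training sample in proportion to the mixture weights."""
    rng = random.Random(seed)
    names = list(MIXTURE)
    weights = [MIXTURE[name] for name in names]
    return rng.choices(names, weights=weights, k=num_samples)


if __name__ == "__main__":
    counts = Counter(sample_sources(100_000))
    for name, count in counts.most_common():
        print(f"{name:>13}: {count / 100_000:.3f}")
```

Because the proportions do not follow raw size, small high-quality sources such as Wikipedia and Books end up being seen for about two epochs, while most other sources are seen roughly once.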
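The four model sizes follow from a few shape parameters (model dimension and number of layers). The sketch below counts parameters for a LLaMA-style decoder under stated assumptions: no bias terms, untied input embedding and output projection, four d×d attention projections, a three-matrix SwiGLU block, RMSNorm gains, a 32,000-token vocabulary, and a hidden size of 2/3 · 4d rounded up to a multiple of 256. The rounding rule is assumed to follow the released reference code; the paper itself only gives 2/3 · 4d.

```python
# Parameter count for a LLaMA-style decoder-only transformer (no bias terms).

VOCAB_SIZE = 32_000


def ffn_hidden_dim(dim: int, multiple_of: int = 256) -> int:
    """SwiGLU hidden size: 2/3 of 4*dim, rounded up to a multiple of `multiple_of`."""
    hidden = int(2 * (4 * dim) / 3)
    return multiple_of * ((hidden + multiple_of - 1) // multiple_of)


def count_params(dim: int, n_layers: int, vocab_size: int = VOCAB_SIZE) -> int:
    """Total parameters for the given width, depth and vocabulary."""
    hidden = ffn_hidden_dim(dim)
    attention = 4 * dim * dim          # W_q, W_k, W_v, W_o
    feed_forward = 3 * dim * hidden    # W1 (gate), W3 (up), W2 (down)
    norms = 2 * dim                    # RMSNorm gains before attention and before the FFN
    per_layer = attention + feed_forward + norms
    embeddings = 2 * vocab_size * dim  # input embedding + untied output projection
    final_norm = dim
    return n_layers * per_layer + embeddings + final_norm


# (model dimension, layers) for the four sizes reported in the paper
CONFIGS = {"7B": (4096, 32), "13B": (5120, 40), "33B": (6656, 60), "65B": (8192, 80)}

if __name__ == "__main__":
    for name, (dim, n_layers) in CONFIGS.items():
        total = count_params(dim, n_layers)
        print(f"LLaMA-{name}: {total:,} parameters (~{total / 1e9:.2f}B)")
```

Under these assumptions the totals land within 0.1B of the 6.7B, 13.0B, 32.5B and 65.2B listed in the paper.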
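For completeness on training setup: the paper reports AdamW (β1 = 0.9, β2 = 0.95, weight decay 0.1, gradient clipping at 1.0) with a cosine learning-rate schedule that ends at 10% of the peak, 2,000 warmup steps, and peak rates of 3e-4 (7B, 13B) or 1.5e-4 (33B, 65B). A minimal sketch of that schedule; the linear warmup shape and the roughly 250k total steps in the usage example (about 1T tokens at about 4M tokens per batch) are assumptions, and `llama_style_lr` is a hypothetical name.

```python
import math


def llama_style_lr(step: int, max_lr: float, total_steps: int,
                   warmup_steps: int = 2000, final_ratio: float = 0.1) -> float:
    """Learning rate at `step`: linear warmup, then cosine decay to final_ratio * max_lr."""
    if step < warmup_steps:
        return max_lr * (step + 1) / warmup_steps
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))  # falls from 1 to 0
    min_lr = final_ratio * max_lr
    return min_lr + (max_lr - min_lr) * cosine


if __name__ == "__main__":
    total = 250_000  # ~1T tokens / ~4M tokens per batch (approximate)
    for s in (0, 1_000, 2_000, 125_000, 250_000):
        print(s, f"{llama_style_lr(s, 3e-4, total):.2e}")
```

Clamping `progress` at 1.0 keeps the rate at its 10% floor if training runs past `total_steps`.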

Experiments:

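- Benchmarks: Evaluated on 20 benchmarks covering common sense reasoning (e.g., BoolQ, PIQA, HellaSwag, WinoGrande), closed-book question answering (Natural Questions, TriviaQA), reading comprehension (RACE), mathematical reasoning (MATH, GSM8k), code generation (HumanEval, MBPP), and massive multitask language understanding (MMLU).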

- Performance Metrics: Evaluated in zero-shot and few-shot settings, reporting exact match for closed-book question answering and pass@k for code generation; multiple-choice tasks were scored by selecting the completion with the highest likelihood given the context.
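The pass@k scores for code generation use the unbiased estimator of Chen et al. (2021): generate n ≥ k samples per problem, count the c samples that pass the unit tests, and estimate the probability that at least one of k randomly drawn samples passes, 1 − C(n−c, k)/C(n, k). A sketch with the standard library's `math.comb`; the function names are hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: 1 - C(n - c, k) / C(n, k).

    n: samples generated, c: samples that pass the tests, k: sample budget being scored.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results, k: int) -> float:
    """Average pass@k over problems, given one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    per_problem = [(200, 30), (200, 0), (200, 150)]
    for k in (1, 10, 100):
        print(f"pass@{k}: {mean_pass_at_k(per_problem, k):.3f}")
```

Using exact binomial coefficients rather than 1 − (1 − c/n)^k avoids the upward bias of the naive plug-in estimate; Python's arbitrary-precision integers keep `comb` exact even for n = 200.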
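Exact match counts a generated answer as correct if it equals any reference answer after normalization. The paper does not spell out the normalization here, so the sketch below assumes the common SQuAD-style recipe (lowercase, strip ASCII punctuation and the articles "a", "an", "the", collapse whitespace); all names are hypothetical.

```python
import re
import string

# Characters stripped during normalization (ASCII punctuation only, an assumption).
PUNCTUATION = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and English articles, and collapse whitespace."""
    text = "".join(ch for ch in text.lower() if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold_answers: list[str]) -> bool:
    """True if the normalized prediction equals any normalized reference answer."""
    pred = normalize_answer(prediction)
    return any(pred == normalize_answer(gold) for gold in gold_answers)


def exact_match_score(predictions, references) -> float:
    """Fraction of questions answered correctly; references holds a list of answers per question."""
    hits = sum(exact_match(p, golds) for p, golds in zip(predictions, references))
    return hits / len(predictions)


if __name__ == "__main__":
    preds = ["The Eiffel Tower.", "Lyon"]
    refs = [["Eiffel Tower"], ["Paris"]]
    print(exact_match_score(preds, refs))
```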

Implications: Because the smaller models are competitive, strong language models become usable by researchers with limited computational resources; LLaMA-13B, for example, can run on a single GPU.

Findings

Outcomes:

- LLaMA-13B outperformed GPT-3 on most benchmarks despite being over 10× smaller.

- LLaMA-65B demonstrated competitive performance against larger models like Chinchilla and PaLM, showcasing the effectiveness of the scaling approach.

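- LLaMA-65B achieved state-of-the-art zero-shot and few-shot results on the closed-book question answering benchmarks Natural Questions and TriviaQA, and was competitive with PaLM-540B on reading comprehension (RACE).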

Significance: The findings challenge the prevailing belief that larger models are inherently better: smaller models trained on more tokens can match or exceed much larger ones, as LLaMA-13B does against GPT-3.

Future Work: Further investigate instruction fine-tuning, which gave promising early results; release larger models trained on larger pretraining corpora, since performance kept improving with scale; and improve robustness and reduce biases.

Potential Impact: Advancements in model efficiency and accessibility could democratize AI research, enabling broader participation and innovation in the field of natural language processing.