A Neural Algorithm of Artistic Style

Abstract: In fine art, especially painting, humans have mastered the skill to create unique visual experiences through composing a complex interplay between the content and style of an image. Thus far the algorithmic basis of this process is unknown and there exists no artificial system with similar capabilities. However, in other key areas of visual perception such as object and face recognition near-human performance was recently demonstrated by a class of biologically inspired vision models called Deep Neural Networks. Here we introduce an artificial system based on a Deep Neural Network that creates artistic images of high perceptual quality. The system uses neural representations to separate and recombine content and style of arbitrary images, providing a neural algorithm for the creation of artistic images. Moreover, in light of the striking similarities between performance-optimised artificial neural networks and biological vision, our work offers a path forward to an algorithmic understanding of how humans create and perceive artistic imagery.

Synopsis

Overview

- Keywords: Neural networks, artistic style transfer, convolutional neural networks, content representation, style representation

- Objective: Develop a neural algorithm that separates and recombines content and style from images to create artistic renditions.

- Hypothesis: The representations of content and style in convolutional neural networks can be manipulated independently to synthesize new images.

Background

Preliminary Theories:

- Convolutional Neural Networks (CNNs): A class of deep learning models designed for processing structured grid data like images, consisting of layers that extract features hierarchically.

- Style Transfer: A technique in computer vision that involves applying the visual appearance (style) of one image to the content of another.

- Texture Transfer: Methods that focus on transferring texture patterns from one image to another, often using non-parametric techniques.

- Feature Representation: The concept that neural networks can represent images through various layers, capturing different levels of abstraction.
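
To make hierarchical feature extraction concrete, here is a minimal NumPy sketch of two stacked convolution, ReLU, and pooling stages. The filters are random rather than trained, and the helper names (`conv2d`, `avg_pool`, `forward`) and all sizes are illustrative assumptions. The point is only that each stage yields a stack of feature maps, and that deeper maps summarise a larger region of the input at lower resolution.

```python
import numpy as np

def conv2d(x, w):
    """'Valid' cross-correlation. x: (C_in, H, W), w: (C_out, C_in, k, k)."""
    c_out, _, k, _ = w.shape
    _, h, wd = x.shape
    out = np.zeros((c_out, h - k + 1, wd - k + 1))
    for i in range(h - k + 1):
        for j in range(wd - k + 1):
            patch = x[:, i:i + k, j:j + k]
            # Contract every filter against the patch: one value per filter.
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out

def avg_pool(x):
    """2x2 average pooling with stride 2 (an odd trailing row/column is dropped)."""
    c, h, wd = x.shape
    x = x[:, :h // 2 * 2, :wd // 2 * 2]
    return x.reshape(c, h // 2, 2, wd // 2, 2).mean(axis=(2, 4))

def forward(image, filters):
    """Return the ReLU feature maps produced by each stage."""
    features, x = [], image
    for w in filters:
        x = np.maximum(conv2d(x, w), 0.0)   # convolution followed by ReLU
        features.append(x)
        x = avg_pool(x)                     # downsample before the next stage
    return features

rng = np.random.default_rng(0)
image = rng.standard_normal((3, 16, 16))            # toy 3-channel image
filters = [rng.standard_normal((4, 3, 3, 3)),       # stage 1: 3 -> 4 channels
           rng.standard_normal((8, 4, 3, 3))]       # stage 2: 4 -> 8 channels
feature_maps = forward(image, filters)
shapes = [f.shape for f in feature_maps]
```

With these sizes, a unit in the first stage depends on a 3x3 patch of the input, while a unit in the second stage depends on an 8x8 patch, which is one simple sense in which deeper layers describe the image at a coarser, more abstract level.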

Prior Research:

- 2000: Tenenbaum and Freeman proposed bilinear models for separating style and content.

- 2004: Elgammal and Lee introduced methods for separating style and content on nonlinear manifolds.

- 2015: Deep convolutional networks such as the VGG network were reported to reach near-human performance in object recognition, providing the feature representations on which this style transfer method builds.

Methodology

Key Ideas:

- Content Representation: Uses the feature responses in higher layers of the CNN, which capture the objects in an image and their arrangement rather than exact pixel values.

- Style Representation: Builds a multi-scale representation of an image's style from the correlations between the feature maps within a layer, given by that layer's Gram matrix, and combines these correlations over several layers.

- Loss Function: A weighted sum of a content loss and a style loss is minimized with respect to the pixels of the generated image; the ratio of the two weights sets the trade-off between preserving content and matching style.
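
The three ideas above can be written down compactly. The following NumPy sketch assumes each layer's activations have already been extracted and flattened into a matrix of shape `(N, M)`, with `N` feature maps and `M` spatial positions. The normalisation constants follow the formulas in the original paper; the function names, layer names, and toy inputs are illustrative assumptions, not the authors' code.

```python
import numpy as np

def gram_matrix(F):
    """Inner products between all pairs of feature maps in one layer.

    F has shape (N, M): N feature maps, each flattened to M positions.
    """
    return F @ F.T

def content_loss(F, P):
    """Half the squared error between generated (F) and content (P) features."""
    return 0.5 * np.sum((F - P) ** 2)

def style_layer_loss(F, S):
    """Gram-matrix mismatch for one layer, normalised by 4 * N^2 * M^2."""
    N, M = F.shape
    diff = gram_matrix(F) - gram_matrix(S)
    return np.sum(diff ** 2) / (4.0 * N**2 * M**2)

def total_loss(gen, content, style, content_layer, style_weights, alpha, beta):
    """alpha * content loss + beta * weighted sum of per-layer style losses.

    gen, content, style: dicts mapping a layer name to an (N, M) array.
    style_weights: dict mapping each style layer name to its weight.
    """
    l_content = content_loss(gen[content_layer], content[content_layer])
    l_style = sum(w * style_layer_loss(gen[name], style[name])
                  for name, w in style_weights.items())
    return alpha * l_content + beta * l_style

# Toy usage with random "activations" for two hypothetical layers.
rng = np.random.default_rng(0)
layers = {"low": (4, 64), "high": (8, 16)}
content = {k: rng.standard_normal(s) for k, s in layers.items()}
style = {k: rng.standard_normal(s) for k, s in layers.items()}
weights = {"low": 0.5, "high": 0.5}

# Using the content features as the generated image: content term is zero,
# so the result is the pure (scaled) style mismatch.
loss = total_loss(content, content, style, "high", weights, alpha=1.0, beta=1e3)
```

Because the Gram matrix sums over spatial positions, shuffling the columns of `F` leaves it unchanged up to rounding. This is why the style term responds to which features co-occur but not to where they appear in the image.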

Experiments:

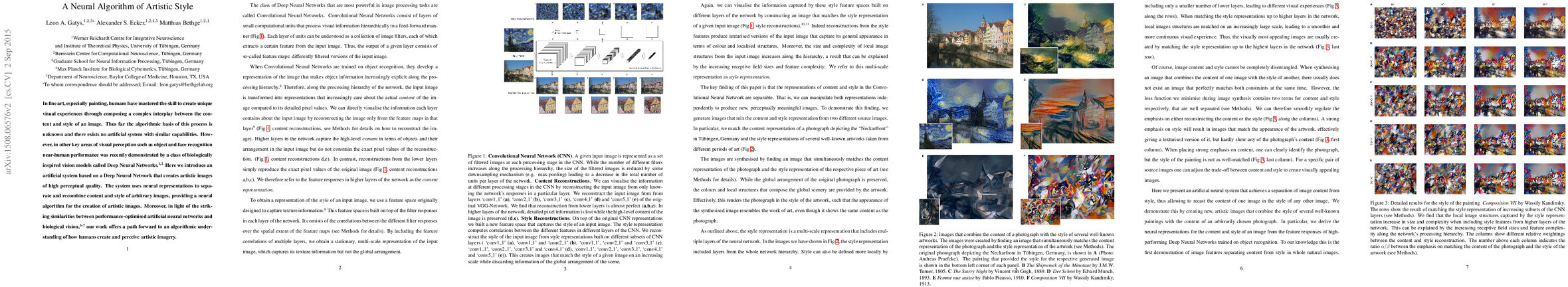

- Image Synthesis: The algorithm synthesizes images by matching the content representation of a photograph with the style representation of various artworks.

- Ablation Studies: Examine how the result changes with the set of layers included in the style representation and with the relative weight given to content versus style.
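
A minimal sketch of the synthesis procedure: start from a white-noise image and follow the gradient of the combined loss with respect to the pixels. To stay self-contained, it replaces the trained CNN with a single random linear map `W` applied at every pixel. This is a stand-in assumption, not the network used in the paper, so the "style" it captures amounts to colour statistics only. The names `loss_and_grad` and `synthesize`, and the simple accept-or-shrink step rule, are likewise illustrative choices.

```python
import numpy as np

def loss_and_grad(X, W, P, A, alpha, beta):
    """Combined loss and its gradient with respect to the image X.

    X: (C, M) image with C channels and M pixels.
    W: (N, C) toy feature extractor, F = W @ X (stands in for a CNN layer).
    P: (N, M) content-target features.  A: (N, N) style-target Gram matrix.
    """
    F = W @ X
    N, M = F.shape
    dc = F - P                    # content residual
    ds = F @ F.T - A              # Gram-matrix residual (symmetric)
    loss = (alpha * 0.5 * np.sum(dc ** 2)
            + beta * np.sum(ds ** 2) / (4.0 * N**2 * M**2))
    dF = alpha * dc + beta * (ds @ F) / (N**2 * M**2)
    return loss, W.T @ dF         # chain rule through F = W @ X

def synthesize(content_img, style_img, W, alpha, beta, steps=300, seed=0):
    """Gradient descent on the pixels, starting from white noise."""
    rng = np.random.default_rng(seed)
    P = W @ content_img
    Fs = W @ style_img
    A = Fs @ Fs.T
    X = rng.standard_normal(content_img.shape)
    loss, grad = loss_and_grad(X, W, P, A, alpha, beta)
    history, step = [loss], 1.0
    for _ in range(steps):
        X_new = X - step * grad
        new_loss, new_grad = loss_and_grad(X_new, W, P, A, alpha, beta)
        if new_loss < loss:       # accept the step and grow it slightly
            X, loss, grad = X_new, new_loss, new_grad
            step *= 1.2
        else:                     # reject the step and shrink it
            step *= 0.5
        history.append(loss)
    return X, history

rng = np.random.default_rng(1)
W = rng.standard_normal((6, 3))               # hypothetical 6-feature extractor
content_img = rng.standard_normal((3, 64))    # toy images: 3 channels, 64 pixels
style_img = rng.standard_normal((3, 64))
result, history = synthesize(content_img, style_img, W, alpha=1.0, beta=1e3)
```

Raising `beta` relative to `alpha` pulls the result toward the style statistics, while lowering it keeps the result closer to the content features; this ratio is the quantity varied when the emphasis on content versus style is adjusted.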

Implications: Because the method can vary the content and the style of an image independently, it offers a way to generate controlled stimuli for studying visual perception and the neural representation of art, and so to probe how humans perceive and create artistic imagery.

Findings

Outcomes:

- Successful separation of content and style, enabling the creation of visually appealing images that blend elements from different sources.

- Demonstrated that including style representations from higher layers of the CNN matches local image structure at an increasingly large scale, leading to smoother and more visually continuous results.

- The ability to adjust the balance between content and style allows for a range of artistic expressions.

Significance: This research shows that the representations of content and style in a CNN are separable for natural images, whereas earlier work on separating the two had been evaluated on much simpler inputs, and it advances the understanding of how neural networks can be used for artistic applications.

Future Work: Potential research directions include refining the algorithm for real-time applications, exploring additional styles, and applying the methodology to video content.

Potential Impact: Advances in artistic style transfer could improve creative tools and user experiences in fields such as digital art, gaming, and virtual reality.