Teaching Machines to Read and Comprehend

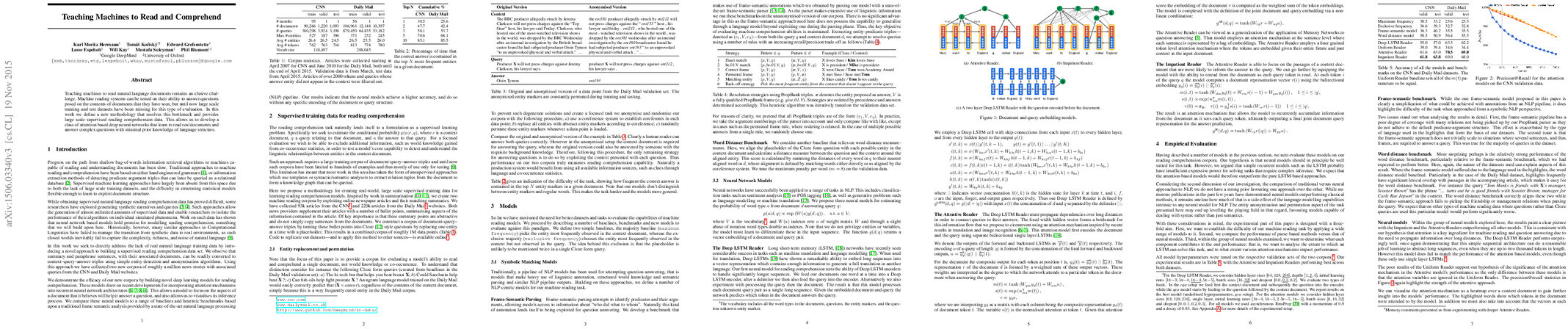

Abstract: Teaching machines to read natural language documents remains an elusive challenge. Machine reading systems can be tested on their ability to answer questions posed on the contents of documents that they have seen, but until now large scale training and test datasets have been missing for this type of evaluation. In this work we define a new methodology that resolves this bottleneck and provides large scale supervised reading comprehension data. This allows us to develop a class of attention based deep neural networks that learn to read real documents and answer complex questions with minimal prior knowledge of language structure.

Synopsis

Overview

- Keywords: Machine Reading, Comprehension, Neural Networks, Attention Mechanisms, Natural Language Processing

- Objective: Develop a large-scale supervised reading comprehension dataset and demonstrate the effectiveness of attention-based neural networks for answering questions based on document content.

- Hypothesis: Neural models, particularly those using attention mechanisms, will outperform traditional symbolic NLP baselines on reading comprehension tasks.

- Innovation: A novel methodology for creating large-scale supervised datasets from news articles paired with their human-written summaries, enabling deep learning models to be trained for reading comprehension.

Background

Preliminary Theories:

- Machine Reading: The process of enabling machines to read and understand human language, traditionally reliant on rule-based systems or information extraction.

- Attention Mechanisms: Techniques that let a neural network weight parts of its input by their relevance to the current query or processing step, which helps on tasks requiring long-range dependencies.

- Cloze Tasks: A method of assessing comprehension by asking a reader, human or machine, to fill in a missing word or entity in a passage based on its context.

- Entity Recognition: The identification and classification of entities in text, crucial for understanding relationships and context in natural language.
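To make the Cloze idea concrete, the following minimal Python sketch turns one summary sentence into an anonymised (context, query, answer) triple. It assumes the entity strings have already been identified (the original pipeline relied on a coreference system for this; here they are simply passed in). The `@entity`/`@placeholder` marker format and the function name `make_cloze_example` are hypothetical illustrative choices, not the paper's exact preprocessing.

```python
import random

PLACEHOLDER = "@placeholder"  # hypothetical marker for the blanked-out answer


def make_cloze_example(document, summary_point, entities, answer, seed=None):
    """Build an anonymised (context, query, answer) triple from one summary point.

    `entities` is assumed to be a pre-computed list of entity strings; in a real
    pipeline these would come from entity recognition plus coreference.
    """
    rng = random.Random(seed)
    # Randomly permute marker ids so that a marker says nothing about which
    # real-world entity it stands for across examples.
    ids = list(range(len(entities)))
    rng.shuffle(ids)
    mapping = {ent: f"@entity{i}" for ent, i in zip(entities, ids)}

    def anonymise(text):
        # Replace longer names first so "Barack Obama" is handled before "Obama".
        for ent in sorted(mapping, key=len, reverse=True):
            text = text.replace(ent, mapping[ent])
        return text

    # Blank out the answer entity in the summary sentence to form the Cloze query.
    query = summary_point.replace(answer, PLACEHOLDER)
    return anonymise(document), anonymise(query), mapping[answer]


doc = "Alice met Bob in Paris. Bob later flew home."
summary = "Bob met Alice in Paris"
context, query, answer = make_cloze_example(
    doc, summary, ["Alice", "Bob", "Paris"], "Paris", seed=0
)
print(context)
print(query, "->", answer)
```

Because the answer only appears in the query as `@placeholder`, a model can recover it solely by locating the matching marker in the anonymised context.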

Prior Research:

- 2010: Development of early machine reading systems that utilized rule-based approaches and limited datasets.

- 2014: Introduction of memory networks that incorporated attention mechanisms for improved question answering.

- 2015: Emergence of deep learning models in NLP, demonstrating significant improvements in various tasks, including reading comprehension.

Methodology

Key Ideas:

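- Dataset Creation: Online news articles from CNN and the Daily Mail, together with their bullet-point summaries, are turned into document-query-answer triples. Each summary point becomes a Cloze-style query by replacing one entity with a placeholder, and the removed entity is the answer. This yields approximately one million data points.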

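- Neural Network Architectures: Attention-based models, namely the Attentive Reader and the Impatient Reader, read the document and query and predict the answer entity. The Attentive Reader attends over the document once using an encoding of the whole query, while the Impatient Reader rereads the document as it processes each query token. A Deep LSTM Reader without attention serves as a neural point of comparison.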

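- Entity Anonymization: Entities in the dataset are replaced with abstract markers (for example, @entity1) that are randomly permuted for each example, so that the model must rely on the document's context rather than on world knowledge or language-model priors about which entities are likely.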
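The attention step at the heart of the Attentive Reader can be sketched in plain Python as below. It loosely follows the paper's formulation as we understand it: each document token vector y(t) is combined with the query encoding u through a tanh layer, a softmax over positions gives attention weights s(t), the weighted sum r is merged with u into a joint representation g, and candidate answers are scored from g. The token and query vectors, which in the paper come from bidirectional LSTMs, are random stand-ins here, as are all weights; the matrix names (`W_ym`, `W_um`, `w_ms`, `W_rg`, `W_ug`, `W_a`) are illustrative labels, not a reference implementation.

```python
import math
import random


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(xs):
    """Numerically stable softmax."""
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attentive_read(Y, u, W_ym, W_um, w_ms, W_rg, W_ug):
    """Attend over document token vectors Y given query vector u.

    Returns the attention weights s (one per token) and the joint
    document-query representation g.
    """
    Wu = matvec(W_um, u)  # query contribution, shared by every position
    scores = []
    for y in Y:
        # m(t) = tanh(W_ym y(t) + W_um u)
        m = [math.tanh(a + b) for a, b in zip(matvec(W_ym, y), Wu)]
        # unnormalised attention score w_ms . m(t)
        scores.append(sum(w * mi for w, mi in zip(w_ms, m)))
    s = softmax(scores)
    # r = sum_t s(t) y(t): attention-weighted document representation
    r = [sum(s_t * y[i] for s_t, y in zip(s, Y)) for i in range(len(Y[0]))]
    # g = tanh(W_rg r + W_ug u)
    g = [math.tanh(a + b) for a, b in zip(matvec(W_rg, r), matvec(W_ug, u))]
    return s, g


rng = random.Random(0)


def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


h, k, T, n_entities = 4, 3, 5, 6  # hidden size, attention size, tokens, candidates
Y = rand_matrix(T, h)              # stand-in for BiLSTM document outputs
u = rand_matrix(1, h)[0]           # stand-in for the query encoding
s, g = attentive_read(
    Y, u,
    W_ym=rand_matrix(k, h), W_um=rand_matrix(k, h), w_ms=rand_matrix(1, k)[0],
    W_rg=rand_matrix(h, h), W_ug=rand_matrix(h, h),
)
# Score each candidate entity from g and pick the most probable one.
W_a = rand_matrix(n_entities, h)
probs = softmax(matvec(W_a, g))
prediction = max(range(n_entities), key=lambda i: probs[i])
```

In practice this would be vectorised with a tensor library and trained end to end; plain lists keep the sketch dependency-free and make each step of the attention computation visible.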

Experiments:

- Model Evaluation: Comparison of the neural models against symbolic baselines, including maximum- and exclusive-frequency heuristics, a frame-semantic parsing model, and a word distance model.

- Performance Metrics: Accuracy of models on validation and test sets derived from the CNN and Daily Mail datasets, focusing on the ability to correctly identify answers based on context.
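Two of the simplest baselines in the paper are easy to state in code: maximum frequency predicts the entity marker seen most often in the document, and exclusive frequency predicts the most frequent marker that does not also appear in the query. The sketch below implements both, together with the accuracy metric, over pre-anonymised token lists. The helper names and the two toy examples are hypothetical and invented for illustration; they say nothing about the reported results.

```python
from collections import Counter


def entity_counts(doc_tokens, exclude=()):
    """Count anonymised entity markers in the document, skipping `exclude`."""
    return Counter(
        t for t in doc_tokens if t.startswith("@entity") and t not in exclude
    )


def max_frequency(doc_tokens, query_tokens):
    # Most frequent entity in the document (ties go to the first one seen).
    counts = entity_counts(doc_tokens)
    return counts.most_common(1)[0][0] if counts else None


def exclusive_frequency(doc_tokens, query_tokens):
    # Most frequent document entity that does not also occur in the query.
    counts = entity_counts(doc_tokens, exclude=set(query_tokens))
    return counts.most_common(1)[0][0] if counts else None


def accuracy(predict, examples):
    """Fraction of (document, query, answer) examples answered correctly."""
    correct = sum(predict(doc, query) == answer for doc, query, answer in examples)
    return correct / len(examples)


examples = [
    ("@entity1 beat @entity2 , and @entity1 celebrated in @entity3".split(),
     "@placeholder beat @entity2".split(), "@entity1"),
    ("@entity4 visited @entity5 ; @entity5 welcomed @entity4 and @entity6".split(),
     "@entity4 visited @placeholder".split(), "@entity5"),
]
print(accuracy(max_frequency, examples))        # 0.5
print(accuracy(exclusive_frequency, examples))  # 1.0
```

In the second toy example the most frequent entity already appears in the query, which is exactly the case the exclusive-frequency heuristic is designed to handle.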

Implications: The methodology underscores the importance of large-scale, real-world datasets for training effective reading comprehension models, moving beyond the limitations of synthetic data.

Findings

Outcomes:

- Attention-based models (Attentive and Impatient Readers) significantly outperformed traditional models, demonstrating the effectiveness of attention mechanisms in processing complex queries.

- The Deep LSTM Reader showed competitive performance but was outmatched by attention-based architectures, highlighting the limitations of purely sequential models.

- The performance of models varied with document length, indicating that longer contexts posed challenges but could be managed with effective attention strategies.

Significance: The results show that, given sufficient supervised training data, neural models with attention can outperform symbolic NLP approaches such as frame-semantic parsing on reading comprehension tasks.

Future Work: Exploration of incorporating world knowledge and multi-document queries into models, as well as developing more complex attention mechanisms to handle intricate inference tasks.

Potential Impact: Advancements in reading comprehension models could lead to significant improvements in applications such as automated question answering, summarization, and interactive AI systems capable of understanding and responding to human language more effectively.