OverFeat: Integrated Recognition, Localization and Detection using Convolutional Networks

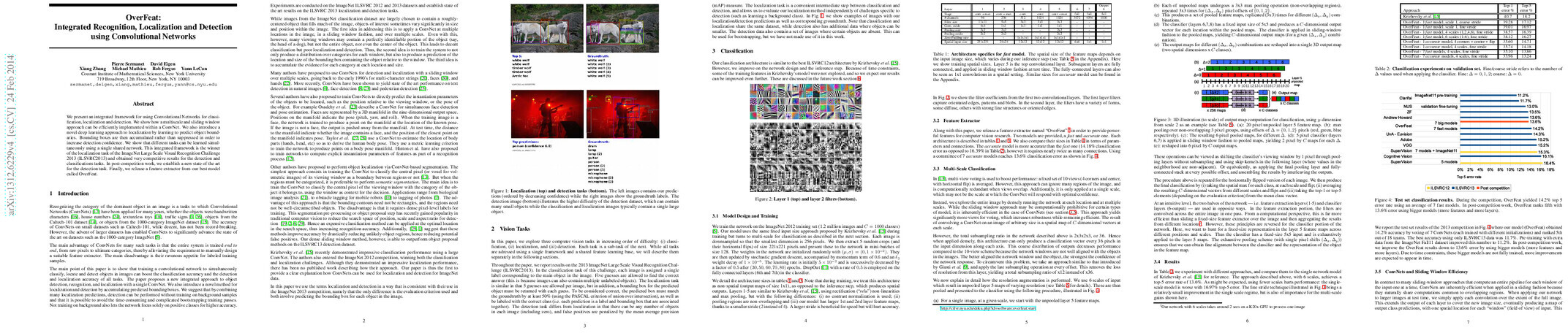

Abstract: We present an integrated framework for using Convolutional Networks for classification, localization and detection. We show how a multiscale and sliding window approach can be efficiently implemented within a ConvNet. We also introduce a novel deep learning approach to localization by learning to predict object boundaries. Bounding boxes are then accumulated rather than suppressed in order to increase detection confidence. We show that different tasks can be learned simultaneously using a single shared network. This integrated framework is the winner of the localization task of the ImageNet Large Scale Visual Recognition Challenge 2013 (ILSVRC2013) and obtained very competitive results for the detection and classification tasks. In post-competition work, we establish a new state of the art for the detection task. Finally, we release a feature extractor from our best model called OverFeat.

Synopsis

Overview

- Keywords: Convolutional Networks, Object Recognition, Localization, Detection, ImageNet

- Objective: Present an integrated framework for using Convolutional Networks for classification, localization, and detection.

- Hypothesis: Training a convolutional network to simultaneously classify, locate, and detect objects can enhance the accuracy of all tasks.

- Innovation: Introduction of a novel deep learning approach for localization by predicting object boundaries and accumulating bounding boxes to improve detection confidence.

Background

Preliminary Theories:

- Convolutional Networks (ConvNets): Neural networks designed to process data with a grid-like topology, particularly effective for image data.

- Sliding Window Approach: A technique used in object detection where a window slides over the image to detect objects at various locations and scales.

- Multi-Scale Detection: The practice of applying detection algorithms at multiple scales to accommodate objects of varying sizes.

- Bounding Box Regression: A method to predict the location of objects by learning to output coordinates for bounding boxes.
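
To make the sliding window and multi-scale ideas above concrete, the following is a minimal sketch of the naive approach: enumerate every window position at every scale and map it back to original-image coordinates. The function name `sliding_windows` and the default window size, stride, and scales are illustrative assumptions, not values taken from the paper.

```python
from typing import Iterator, Tuple

# (scale, x0, y0, x1, y1), with coordinates in original-image pixels
Window = Tuple[float, float, float, float, float]


def sliding_windows(width: int, height: int, window: int = 64,
                    stride: int = 32,
                    scales: Tuple[float, ...] = (1.0, 2.0)) -> Iterator[Window]:
    """Yield every window position at every scale (hypothetical helper).

    The image is conceptually resized by each scale factor, a fixed-size
    window slides over the resized image, and each window is mapped back
    to the coordinate frame of the original image.
    """
    for s in scales:
        scaled_w, scaled_h = round(width * s), round(height * s)
        for y in range(0, scaled_h - window + 1, stride):
            for x in range(0, scaled_w - window + 1, stride):
                yield (s, x / s, y / s, (x + window) / s, (y + window) / s)


if __name__ == "__main__":
    for win in sliding_windows(128, 64):
        print(win)
```

Upscaling the image makes the fixed-size window cover a smaller region of the original, so larger scales pick up smaller objects. Running a classifier independently on each window is the costly baseline that a convolutional implementation avoids.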

Prior Research:

- 1990s: Early applications of ConvNets with sliding windows to detecting character strings, faces, and hands.

- 2012: Krizhevsky et al. demonstrated significant advancements in image classification using ConvNets, winning the ImageNet competition.

- 2013: Various authors explored ConvNets for detection and localization, achieving state-of-the-art results in tasks like text and pedestrian detection.

Methodology

Key Ideas:

- Multi-Scale and Sliding Window Implementation: Efficiently applying ConvNets across multiple scales and locations in an image.

- Simultaneous Learning: Training a single network to perform classification, localization, and detection tasks concurrently.

- Accumulation of Bounding Boxes: Instead of suppressing overlapping boxes, the method accumulates predictions to enhance detection confidence.
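
The accumulation idea can be sketched as a greedy merge: repeatedly combine the two closest boxes, averaging their coordinates and adding their confidences, until no pair is close enough. This is a simplified stand-in rather than the paper's exact procedure; the center-distance score, the `max_distance` threshold, and all function names are assumptions made for illustration.

```python
from itertools import combinations
from math import hypot

# A detection is a tuple (x0, y0, x1, y1, confidence).


def center_distance(a, b):
    """Euclidean distance between box centers (assumed match score)."""
    ax, ay = (a[0] + a[2]) / 2, (a[1] + a[3]) / 2
    bx, by = (b[0] + b[2]) / 2, (b[1] + b[3]) / 2
    return hypot(ax - bx, ay - by)


def merge(a, b):
    """Average the coordinates and accumulate the confidences."""
    coords = tuple((p + q) / 2 for p, q in zip(a[:4], b[:4]))
    return coords + (a[4] + b[4],)


def accumulate_boxes(boxes, max_distance):
    """Greedily merge the closest pair until none is within max_distance."""
    boxes = list(boxes)
    while len(boxes) > 1:
        i, j = min(combinations(range(len(boxes)), 2),
                   key=lambda p: center_distance(boxes[p[0]], boxes[p[1]]))
        if center_distance(boxes[i], boxes[j]) > max_distance:
            break
        merged = merge(boxes[i], boxes[j])
        boxes = [b for k, b in enumerate(boxes) if k not in (i, j)]
        boxes.append(merged)
    return boxes


if __name__ == "__main__":
    detections = [(0, 0, 10, 10, 0.5), (2, 2, 12, 12, 0.25),
                  (100, 100, 110, 110, 0.9)]
    print(accumulate_boxes(detections, max_distance=5))
```

Unlike non-maximum suppression, which discards all but the highest-scoring box in a cluster, merging lets several agreeing predictions reinforce one another, while an isolated box keeps only its own confidence.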

Experiments:

- Conducted on the ImageNet ILSVRC 2012 and 2013 datasets.

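- Evaluated detection with mean average precision (mAP), and classification and localization with error rate; a localization counts as correct only if the predicted box carries the correct label and overlaps the ground-truth box by at least 50%.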

- Explored various configurations of scales and regression techniques to optimize performance.
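
Localization accuracy in ILSVRC is judged by intersection-over-union (IOU) between the predicted and ground-truth boxes. A minimal sketch of that check follows, assuming boxes are given as `(x0, y0, x1, y1)` corner tuples; the function names are hypothetical.

```python
def iou(a, b):
    """Intersection-over-union of two (x0, y0, x1, y1) boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    # Width and height of the overlap, clamped at zero for disjoint boxes.
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_correct_localization(pred_label, pred_box, true_label, true_box,
                            threshold=0.5):
    """A prediction counts only if the label matches and IOU >= threshold."""
    return pred_label == true_label and iou(pred_box, true_box) >= threshold


if __name__ == "__main__":
    print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
    print(is_correct_localization("cat", (0, 0, 10, 10),
                                  "cat", (0, 0, 10, 8)))
```

Because the criterion is a hard threshold on IOU, a box that is only slightly off can still count as correct, whereas a regression loss on raw coordinates penalizes every deviation.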

Implications: The design allows for efficient computation and improved accuracy across multiple vision tasks, showcasing the versatility of ConvNets.
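
The efficiency claim can be illustrated with a toy one-dimensional example. The sketch below builds a tiny "network" (one convolution, a ReLU, and a fully connected layer) and shows that running it on every window of a long signal gives the same outputs as running the convolution once over the whole signal and treating the fully connected layer as a second convolution. The network, its weights, and the function names are invented for illustration and are far simpler than the OverFeat architecture.

```python
def conv1d(signal, kernel):
    """Valid (no padding), stride-1 sliding dot product."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def relu(xs):
    return [max(0, x) for x in xs]


def tiny_net(window, conv_kernel, fc_weights):
    """conv -> ReLU -> fully connected, applied to one fixed-size window."""
    features = relu(conv1d(window, conv_kernel))
    assert len(features) == len(fc_weights)
    return sum(f * w for f, w in zip(features, fc_weights))


def sliding_window_outputs(signal, conv_kernel, fc_weights):
    """Naive approach: crop every window and run the full network on it."""
    n = len(conv_kernel) + len(fc_weights) - 1  # network input size
    return [tiny_net(signal[i:i + n], conv_kernel, fc_weights)
            for i in range(len(signal) - n + 1)]


def convolutional_outputs(signal, conv_kernel, fc_weights):
    """Efficient approach: compute features once, apply the FC as a conv."""
    features = relu(conv1d(signal, conv_kernel))
    return conv1d(features, fc_weights)


if __name__ == "__main__":
    signal = [1, -2, 3, 0, 4, -1, 2, 5]
    conv_kernel, fc_weights = [1, -1, 2], [2, 0, -1]
    print(sliding_window_outputs(signal, conv_kernel, fc_weights))
    print(convolutional_outputs(signal, conv_kernel, fc_weights))
```

The naive version recomputes the convolutional features wherever windows overlap, while the convolutional version computes each feature once and shares it across all windows. In a real network with pooling layers, the effective stride between output positions equals the network's total subsampling factor rather than one.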

Findings

Outcomes:

- Achieved first place in the localization task of ILSVRC 2013 with a 29.9% error rate.

- Established a new state of the art for detection with a mean average precision of 24.3%.

- Demonstrated that using a single shared network for multiple tasks can enhance overall performance.

Significance: This research advances the understanding of how ConvNets can be adapted for complex tasks beyond simple classification, challenging previous beliefs about the necessity of separate models for different tasks.

Future Work: Suggested improvements include back-propagating through the entire network for localization, optimizing the loss function to directly target intersection-over-union (IOU), and exploring alternative bounding box parameterizations.

Potential Impact: Pursuing these avenues could lead to even more robust and efficient models for real-time object detection and localization in various applications, including autonomous vehicles and surveillance systems.