Tree of Thoughts: Deliberate Problem Solving with Large Language Models

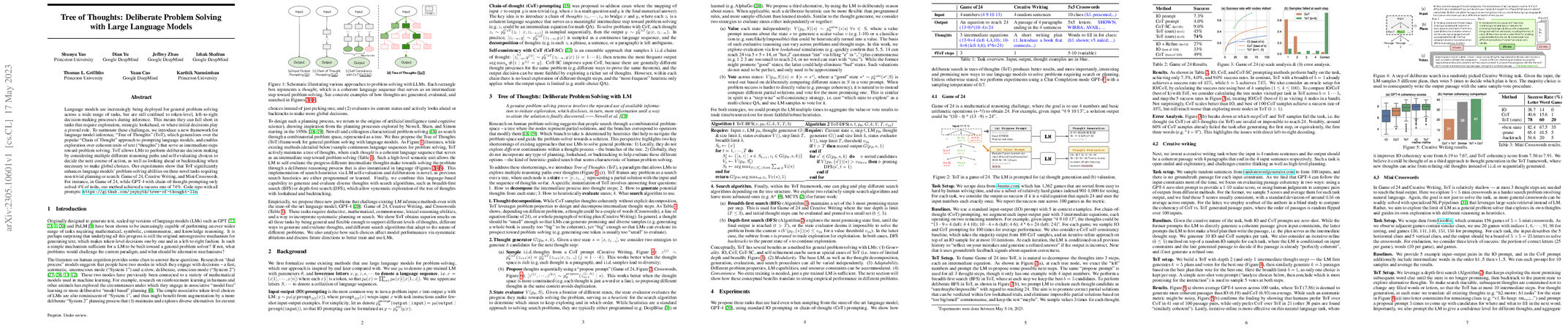

Abstract: Language models are increasingly being deployed for general problem solving across a wide range of tasks, but are still confined to token-level, left-to-right decision-making processes during inference. This means they can fall short in tasks that require exploration, strategic lookahead, or where initial decisions play a pivotal role. To surmount these challenges, we introduce a new framework for language model inference, Tree of Thoughts (ToT), which generalizes over the popular Chain of Thought approach to prompting language models, and enables exploration over coherent units of text (thoughts) that serve as intermediate steps toward problem solving. ToT allows LMs to perform deliberate decision making by considering multiple different reasoning paths and self-evaluating choices to decide the next course of action, as well as looking ahead or backtracking when necessary to make global choices. Our experiments show that ToT significantly enhances language models' problem-solving abilities on three novel tasks requiring non-trivial planning or search: Game of 24, Creative Writing, and Mini Crosswords. For instance, in Game of 24, while GPT-4 with chain-of-thought prompting only solved 4% of tasks, our method achieved a success rate of 74%. Code repo with all prompts: https://github.com/princeton-nlp/tree-of-thought-llm.

Synopsis

Overview

- Keywords: Large Language Models, Problem Solving, Tree of Thoughts, Deliberate Decision Making, Chain of Thought

- Objective: Introduce the Tree of Thoughts (ToT) framework to enhance problem-solving capabilities of large language models through deliberate decision-making processes.

- Hypothesis: Can a tree-based exploration of thoughts improve the problem-solving performance of language models compared to traditional methods?

- Innovation: The ToT framework allows for exploration of multiple reasoning paths and self-evaluation, enabling backtracking and lookahead capabilities that traditional methods lack.

Background

Preliminary Theories:

- Dual Process Theory: Proposes two modes of thinking: a fast, intuitive system (System 1) and a slow, deliberate system (System 2). This theory suggests that language models may benefit from a more structured, deliberative approach.

- Search Algorithms: Classical search methods like breadth-first search (BFS) and depth-first search (DFS) provide foundational strategies for navigating problem spaces, which ToT adapts for language models.

- Chain of Thought (CoT): A prompting method that encourages models to generate intermediate reasoning steps, but lacks the ability to explore multiple paths or backtrack effectively.

- Self-Reflection in LMs: Recent advancements in using LMs to evaluate their own outputs and decisions, enhancing the decision-making process.

Prior Research:

- 2020: Introduction of Chain of Thought prompting, which improved reasoning in LMs by generating intermediate steps.

- 2022: Development of self-consistency methods that sample multiple reasoning paths to improve output reliability.

- 2023: Emergence of program-guided LLM generation, integrating symbolic reasoning with language models to enhance problem-solving.

Methodology

Key Ideas:

- Thought Decomposition: Breaking down problems into manageable thought steps that can be evaluated and explored.

- Thought Generation: Utilizing two strategies for generating thoughts: sampling independent thoughts or proposing thoughts sequentially based on context.

- State Evaluation: Employing the language model to assess the potential success of different states in the problem-solving process, providing a heuristic for search algorithms.

- Search Algorithms: Implementing BFS and DFS to navigate the tree of thoughts, allowing for systematic exploration and backtracking.

Experiments:

- Game of 24: A mathematical challenge where the model must use four numbers to reach 24 through arithmetic operations. ToT significantly outperformed traditional methods.

- Creative Writing: Evaluating the model's ability to generate coherent passages based on random sentences, with ToT showing improved coherence.

- Mini Crosswords: A lexical reasoning task where the model fills in crossword clues, demonstrating the effectiveness of ToT in handling complex language tasks.

Implications: The ToT framework provides a flexible and adaptable approach to problem-solving, allowing for the integration of various search algorithms and thought processes tailored to specific tasks.

Findings

Outcomes:

- ToT achieved a success rate of 74% in the Game of 24, compared to only 4% with traditional Chain of Thought prompting.

- In creative writing tasks, ToT-generated passages were rated more coherent than those produced by standard methods.

- Mini Crosswords demonstrated a word-level success rate of 60% with ToT, significantly higher than traditional methods.

Significance: The research highlights the limitations of existing prompting methods and showcases the advantages of a structured, tree-based approach to reasoning in language models.

Future Work: Exploration of more complex tasks that require advanced reasoning and decision-making, as well as fine-tuning language models to enhance their problem-solving capabilities further.

Potential Impact: If further developed, the ToT framework could revolutionize how language models are applied in real-world scenarios, improving their effectiveness in decision-making and problem-solving across various domains.