GPT-4 Technical Report

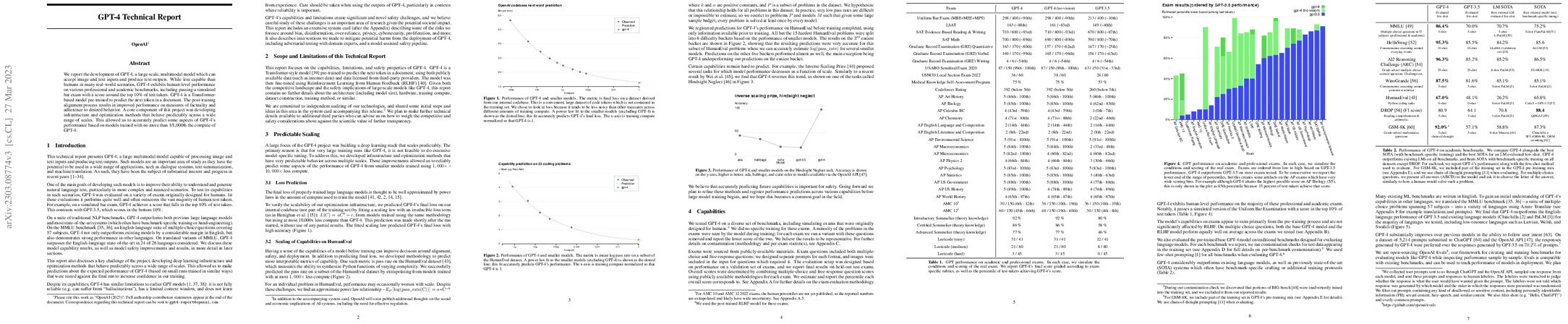

Abstract: We report the development of GPT-4, a large-scale, multimodal model which can accept image and text inputs and produce text outputs. While less capable than humans in many real-world scenarios, GPT-4 exhibits human-level performance on various professional and academic benchmarks, including passing a simulated bar exam with a score around the top 10% of test takers. GPT-4 is a Transformer-based model pre-trained to predict the next token in a document. The post-training alignment process results in improved performance on measures of factuality and adherence to desired behavior. A core component of this project was developing infrastructure and optimization methods that behave predictably across a wide range of scales. This allowed us to accurately predict some aspects of GPT-4's performance based on models trained with no more than 1/1,000th the compute of GPT-4.

Synopsis

Overview

- Keywords: GPT-4, large language model, multimodal, safety, performance, reinforcement learning

- Objective: To present the capabilities, limitations, and safety properties of GPT-4, a large multimodal model.

- Hypothesis: GPT-4 will demonstrate improved performance and safety features compared to its predecessors while still exhibiting certain limitations.

- Innovation: Introduction of a multimodal model capable of processing both text and image inputs, along with significant advancements in safety metrics and performance across various benchmarks.

Background

Preliminary Theories:

- Transformer Architecture: A foundational model architecture that enables efficient processing of sequential data, crucial for language models.

- Reinforcement Learning from Human Feedback (RLHF): A method used to fine-tune models based on human preferences, enhancing alignment with user expectations.

- Scaling Laws in Deep Learning: Empirical relationships, typically power laws, that describe how model performance improves with the amount of training data and computational resources.

- Hallucination in Language Models: The phenomenon where models generate plausible but incorrect or nonsensical information, posing risks in practical applications.
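
To make the RLHF item above concrete, the following is a minimal sketch of the pairwise preference loss commonly used to train a reward model from human comparisons. It is an assumption for illustration: the report does not specify GPT-4's reward-model objective, and the function name and the reward values are hypothetical.

```python
import math


def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Return -log(sigmoid(reward_chosen - reward_rejected)).

    The loss is small when the reward model scores the human-preferred
    response above the rejected one, and large when the order is reversed.
    """
    margin = reward_chosen - reward_rejected
    # log(1 + exp(-margin)), written so exp() never sees a large positive input.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical reward scores for one comparison pair.
agrees_with_human = pairwise_preference_loss(2.0, 0.0)
disagrees_with_human = pairwise_preference_loss(0.0, 2.0)
print(agrees_with_human, disagrees_with_human)
```

Minimizing this loss over many labeled comparisons pushes the reward model to rank responses the way human raters do; the resulting reward signal is then used to fine-tune the language model.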

Prior Research:

- GPT-3: Demonstrated strong few-shot language generation and comprehension at scale, serving as a baseline for subsequent models.

- BERT: Introduced bidirectional context in language understanding, influencing many subsequent pre-trained language models.

- T5 (Text-to-Text Transfer Transformer): Demonstrated that a wide range of language tasks can be cast in a single text-to-text format.

- GPT-3.5: Improved upon GPT-3 with better fine-tuning and safety measures, setting the stage for GPT-4's advancements.

Methodology

Key Ideas:

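- Multimodal Input Processing: GPT-4 accepts both text and image inputs and produces text outputs, expanding its applicability.

- Predictable Scaling Infrastructure: Development of infrastructure and optimization methods that behave predictably across scales, allowing some aspects of GPT-4's performance to be predicted from models trained with no more than 1/1,000th of its compute.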

- Adversarial Testing: Engaging domain experts to probe the model for vulnerabilities and safety risks, enhancing robustness.

- Model-Assisted Safety Pipeline: A training-time approach that combines additional safety-relevant RLHF training prompts with rule-based reward models to steer the model away from harmful outputs.
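
The predictable-scaling idea above can be sketched as fitting a power law to small-scale runs and extrapolating to the full compute budget. This is a sketch under stated assumptions, not the authors' implementation: the functional form `loss = a * compute**b + irreducible`, the assumption that the irreducible term is known in advance, and all numbers below are illustrative.

```python
import math


def fit_power_law(compute, loss, irreducible=0.0):
    """Fit loss ~= a * compute**b + irreducible by least squares in log-log space.

    `irreducible` is assumed known here; in practice it would be fitted too.
    """
    xs = [math.log(c) for c in compute]
    ys = [math.log(l - irreducible) for l in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                      # slope of the log-log line
    a = math.exp(mean_y - b * mean_x)  # intercept, mapped back from log space
    return a, b


def predict_loss(a, b, compute, irreducible=0.0):
    """Evaluate the fitted power law at a given compute budget."""
    return a * compute ** b + irreducible


# Synthetic small runs (made-up data), with compute expressed as a fraction
# of the full training budget and generated from a=2.0, b=-0.1, irreducible=1.0.
small_compute = [1e-6, 1e-5, 1e-4, 1e-3]
small_loss = [2.0 * c ** -0.1 + 1.0 for c in small_compute]

a, b = fit_power_law(small_compute, small_loss, irreducible=1.0)
predicted_full = predict_loss(a, b, 1.0, irreducible=1.0)
print(f"a={a:.3f}, b={b:.3f}, predicted full-scale loss={predicted_full:.3f}")
```

The largest run in this toy data uses 1/1,000th of the full budget, mirroring the setting described in the abstract; real loss curves are noisy, so an extrapolation like this would need to be validated against held-out runs.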

Experiments:

- Benchmark Evaluations: Performance on traditional NLP benchmarks, including MMLU, where GPT-4 significantly outperformed previous models.

- Safety Metrics Assessment: Evaluations on the model's tendency to generate harmful or biased content, showing substantial improvements over GPT-3.5.

- Real-World Task Simulations: Testing the model's capabilities in practical scenarios, such as coding and reasoning tasks.
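
The two kinds of measurement listed above reduce to simple ratios: accuracy on a multiple-choice benchmark, and the relative reduction in an undesired-output rate between two models. The sketch below shows both calculations; the function names, answer keys, and rates are hypothetical and are not results from the report.

```python
def accuracy(predictions, answers):
    """Fraction of multiple-choice questions answered correctly."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    if not answers:
        raise ValueError("at least one question is required")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)


def relative_reduction(baseline_rate, new_rate):
    """Relative drop in an undesired-output rate versus a baseline model."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (baseline_rate - new_rate) / baseline_rate


# Hypothetical answer key and model predictions for four questions.
answer_key = ["A", "C", "B", "D"]
model_predictions = ["A", "C", "D", "D"]
benchmark_accuracy = accuracy(model_predictions, answer_key)

# Hypothetical harmful-output rates for a baseline and a newer model.
harm_reduction = relative_reduction(baseline_rate=0.10, new_rate=0.02)
print(benchmark_accuracy, harm_reduction)
```

Reporting a relative reduction alongside the absolute rates helps avoid overstating gains when the baseline rate is already low.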

Implications: GPT-4's methodology prioritizes safety and reliability in AI applications, aiming to limit potential misuse while improving the user experience.

Findings

Outcomes:

- Performance Improvements: GPT-4 achieved human-level performance on various professional and academic benchmarks, including a simulated bar exam on which it scored around the top 10% of test takers, while remaining less capable than humans in many real-world scenarios.

- Reduced Hallucinations: The model generated nonsensical or false information significantly less often than prior versions, although hallucinations were not eliminated.

- Multilingual Capabilities: Strong performance across multiple languages, surpassing the English-language state of the art in 24 of the 26 languages tested.

- Safety Enhancements: Improved adherence to content policies, with a marked reduction in the generation of toxic or harmful outputs.

Significance: GPT-4's advancements reflect a substantial leap in the capabilities of language models, addressing both performance and safety concerns more effectively than earlier iterations.

Future Work: Continued exploration of safety measures, including the development of more robust classifiers and ongoing assessments of model behavior in diverse contexts.

Potential Impact: If pursued, future research could lead to safer and more reliable AI systems, fostering greater public trust and wider adoption across various sectors.