Neural Architectures for Named Entity Recognition

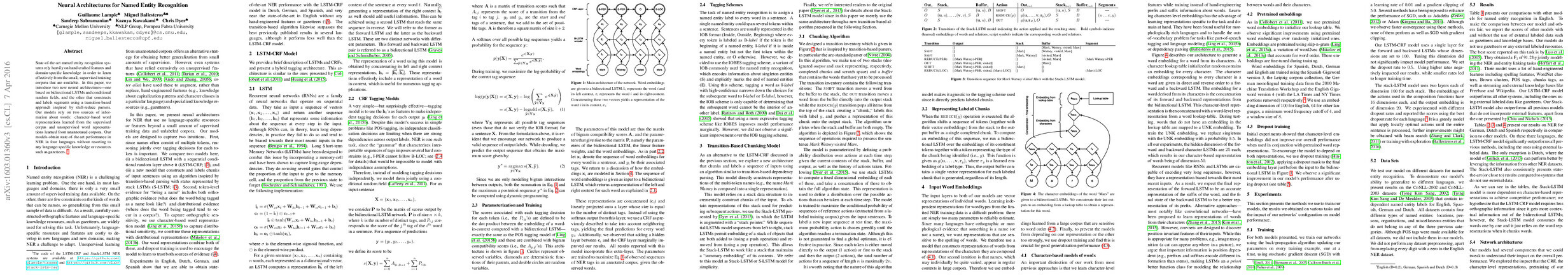

Abstract: State-of-the-art named entity recognition systems rely heavily on hand-crafted features and domain-specific knowledge in order to learn effectively from the small, supervised training corpora that are available. In this paper, we introduce two new neural architectures: one based on bidirectional LSTMs and conditional random fields, and the other that constructs and labels segments using a transition-based approach inspired by shift-reduce parsers. Our models rely on two sources of information about words: character-based word representations learned from the supervised corpus and unsupervised word representations learned from unannotated corpora. Our models obtain state-of-the-art performance in NER in four languages without resorting to any language-specific knowledge or resources such as gazetteers.

Synopsis

Overview

- Keywords: Named Entity Recognition, Neural Networks, LSTM, CRF, Transition-based Parsing

- Objective: Introduce novel neural architectures for Named Entity Recognition (NER) that do not rely on language-specific resources.

- Hypothesis: Neural architectures can learn to identify named entities effectively without extensive hand-crafted features or external resources such as gazetteers.

- Innovation: Introduction of two neural models: a bidirectional LSTM-CRF and a transition-based Stack-LSTM, both leveraging character-based and distributional word representations.

Background

Preliminary Theories:

- Named Entity Recognition (NER): A subtask of information extraction that seeks to locate and classify named entities in text into predefined categories.

- Conditional Random Fields (CRF): A statistical modeling method often used for structured prediction, particularly in sequence labeling tasks like NER.

- Long Short-Term Memory (LSTM): A type of recurrent neural network (RNN) capable of learning long-term dependencies, useful for sequential data processing.

- Character-based Representations: Models that learn word representations from the characters that compose them, addressing issues of out-of-vocabulary words and morphological richness.
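To make the CRF entry above concrete, the following is a minimal sketch of linear-chain scoring and Viterbi decoding in plain Python. It is an illustration under stated assumptions, not the paper's implementation: the function names `sequence_score` and `viterbi` are hypothetical, and the emission and transition scores are made-up numbers. In a neural tagger the per-token emission scores would come from the network, and the transition scores would be learned parameters.

```python
from typing import Dict, List, Tuple

Scores = Dict[str, float]                 # label -> score for one token
Transitions = Dict[Tuple[str, str], float]  # (previous label, label) -> score


def sequence_score(emissions: List[Scores], transitions: Transitions,
                   tags: List[str]) -> float:
    """Score of one tag sequence: per-token scores plus label-to-label scores."""
    score = sum(emissions[i][tag] for i, tag in enumerate(tags))
    score += sum(transitions[(a, b)] for a, b in zip(tags, tags[1:]))
    return score


def viterbi(emissions: List[Scores], transitions: Transitions,
            labels: List[str]) -> Tuple[List[str], float]:
    """Return the highest-scoring tag sequence and its score."""
    # best[t] is the best score of any partial path ending in label t.
    best = {t: emissions[0][t] for t in labels}
    backpointers = []
    for emit in emissions[1:]:
        new_best, pointer = {}, {}
        for cur in labels:
            prev = max(labels, key=lambda p: best[p] + transitions[(p, cur)])
            new_best[cur] = best[prev] + transitions[(prev, cur)] + emit[cur]
            pointer[cur] = prev
        best = new_best
        backpointers.append(pointer)
    # Follow the back-pointers from the best final label to recover the path.
    last = max(labels, key=lambda t: best[t])
    path = [last]
    for pointer in reversed(backpointers):
        path.append(pointer[path[-1]])
    path.reverse()
    return path, best[last]


LABELS = ["O", "B-PER", "I-PER"]
# Made-up emission scores for the three tokens "Mark Watney visited".
EMISSIONS = [
    {"O": 0.1, "B-PER": 2.0, "I-PER": 1.5},
    {"O": 0.5, "B-PER": 1.0, "I-PER": 1.2},
    {"O": 2.0, "B-PER": 0.1, "I-PER": 0.3},
]
# Made-up transition scores: discourage O -> I-PER, reward continuing an entity.
TRANSITIONS = {(a, b): 0.0 for a in LABELS for b in LABELS}
TRANSITIONS[("O", "I-PER")] = -5.0
TRANSITIONS[("B-PER", "I-PER")] = 1.0
TRANSITIONS[("I-PER", "I-PER")] = 0.5

best_path, best_score = viterbi(EMISSIONS, TRANSITIONS, LABELS)
print(best_path)  # ['B-PER', 'I-PER', 'O']
```

The transition scores are what separate this from tagging each token independently: the second token scores highest as `I-PER` only because it follows a `B-PER`.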

Prior Research:

- 2009: Ratinov and Roth compare various NER approaches, establishing benchmarks for future models.

- 2011: Collobert et al. propose a CNN over a sequence of word embeddings with a CRF layer on top, reducing the need for task-specific feature engineering.

- 2015: Huang et al. develop a bidirectional LSTM-CRF model for sequence tagging, similar to the LSTM-CRF presented here but relying on hand-crafted spelling features.

- 2015: Chiu and Nichols combine a bidirectional LSTM with a CNN that learns character-level features for NER, further advancing the use of character-level information.

Methodology

Key Ideas:

- Bidirectional LSTM-CRF: Combines LSTM's ability to capture context with CRF's modeling of label dependencies, enhancing sequential tagging.

- Stack-LSTM: A transition-based model, inspired by shift-reduce parsing, that uses a stack to build and label chunks of the input incrementally, so that multi-token named entities are constructed as single segments.

- Character-based and Distributional Representations: Models utilize both character-level embeddings and pre-trained word embeddings to capture morphological and contextual information.

- Dropout Training: Applied to the combined word representation so that the model learns to use both the character-based and the pre-trained embeddings instead of relying on only one of them.
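The transition-based idea in the list above can be sketched as a small state machine. This is a minimal illustration, assuming a three-action inventory of SHIFT (move the next word onto the stack), OUT (emit the next word as a non-entity), and REDUCE with a label (pop the whole stack as one labeled chunk). The function name `apply_actions`, the tuple encoding of actions, and the hand-written action sequence are all hypothetical, and the learned model that would choose each action is omitted entirely.

```python
from collections import deque
from typing import List, Optional, Tuple

Action = Tuple[str, Optional[str]]              # e.g. ("SHIFT", None), ("REDUCE", "PER")
Segment = Tuple[Tuple[str, ...], Optional[str]]  # (tokens, entity label or None)


def apply_actions(words: List[str], actions: List[Action]) -> List[Segment]:
    """Replay a sequence of chunking actions and return the labeled segments."""
    buffer = deque(words)       # words not yet processed
    stack: List[str] = []       # words of the chunk currently being built
    output: List[Segment] = []  # finished segments, in sentence order
    for name, label in actions:
        if name == "SHIFT":
            stack.append(buffer.popleft())
        elif name == "OUT":
            # Assumption of this sketch: chunks are contiguous, so a word may
            # only be emitted as a non-entity while no chunk is in progress.
            if stack:
                raise ValueError("OUT while a chunk is still on the stack")
            output.append(((buffer.popleft(),), None))
        elif name == "REDUCE":
            if not stack:
                raise ValueError("REDUCE with an empty stack")
            output.append((tuple(stack), label))
            stack.clear()
        else:
            raise ValueError(f"unknown action: {name}")
    if buffer or stack:
        raise ValueError("actions did not consume the whole sentence")
    return output


sentence = ["Mark", "Watney", "visited", "Mars"]
actions = [
    ("SHIFT", None), ("SHIFT", None), ("REDUCE", "PER"),
    ("OUT", None),
    ("SHIFT", None), ("REDUCE", "LOC"),
]
segments = apply_actions(sentence, actions)
for tokens, label in segments:
    print(" ".join(tokens), "->", label or "O")
```

Because a chunk is labeled only when it is reduced, the label decision can take the whole multi-token segment into account, rather than being made one token at a time.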

Experiments:

- Evaluated on the CoNLL-2002 (Spanish, Dutch) and CoNLL-2003 (English, German) datasets, covering four languages.

- Performance is reported as F1 and compared against previously published state-of-the-art results.
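For readers unfamiliar with how F1 is typically computed for NER, here is a minimal sketch of entity-level scoring, in which a predicted entity counts as correct only when both its span and its type match the gold annotation exactly. It assumes BIO-style tags and a single flat tag sequence; the function names `bio_to_spans` and `entity_f1` are hypothetical, and this is not the official CoNLL scoring script.

```python
from typing import List, Set, Tuple

Span = Tuple[int, int, str]  # (start index, end index exclusive, entity type)


def bio_to_spans(tags: List[str]) -> Set[Span]:
    """Collect entity spans from a BIO tag sequence."""
    spans: Set[Span] = set()
    start, etype = None, None
    # The trailing "O" is a sentinel that closes an entity ending the sequence.
    for i, tag in enumerate(list(tags) + ["O"]):
        continues = tag.startswith("I-") and etype == tag[2:]
        if continues:
            continue
        if etype is not None:
            spans.add((start, i, etype))
            start, etype = None, None
        if tag.startswith("B-"):
            start, etype = i, tag[2:]
        # An I- tag with no matching open entity is ignored in this sketch.
    return spans


def entity_f1(gold: List[str], pred: List[str]) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) over exactly matching entity spans."""
    gold_spans, pred_spans = bio_to_spans(gold), bio_to_spans(pred)
    correct = len(gold_spans & pred_spans)
    precision = correct / len(pred_spans) if pred_spans else 0.0
    recall = correct / len(gold_spans) if gold_spans else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


gold_tags = ["B-PER", "I-PER", "O", "B-LOC"]
pred_tags = ["B-PER", "O", "O", "B-LOC"]  # the PER span is cut short
precision, recall, f1 = entity_f1(gold_tags, pred_tags)
print(precision, recall, f1)  # 0.5 0.5 0.5
```

In the example, the truncated person span earns no partial credit, which is why entity-level F1 is stricter than per-token accuracy.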

Implications: The methodology demonstrates that effective NER can be achieved without extensive hand-crafted features or language-specific resources, making it adaptable to low-resource languages.

Findings

Outcomes:

- The LSTM-CRF model achieved state-of-the-art results in English, Dutch, German, and Spanish, outperforming previous models that relied on external resources.

- The Stack-LSTM model also demonstrated competitive performance, particularly in capturing multi-token named entities.

- Character-based representations significantly improved performance, especially in morphologically rich languages.

Significance: This research challenges the prevailing belief that extensive hand-crafted features are necessary for effective NER, showcasing the potential of neural architectures to learn from limited data.

Future Work: Suggested avenues include exploring more complex architectures, integrating unsupervised learning techniques, and expanding to additional languages and domains.

Potential Impact: Advancements in NER methodologies could facilitate broader applications in natural language processing, particularly in low-resource settings, enhancing automated information extraction across diverse languages and contexts.