Training Very Deep Networks

Abstract: Theoretical and empirical evidence indicates that the depth of neural networks is crucial for their success. However, training becomes more difficult as depth increases, and training of very deep networks remains an open problem. Here we introduce a new architecture designed to overcome this. Our so-called highway networks allow unimpeded information flow across many layers on information highways. They are inspired by Long Short-Term Memory recurrent networks and use adaptive gating units to regulate the information flow. Even with hundreds of layers, highway networks can be trained directly through simple gradient descent. This enables the study of extremely deep and efficient architectures.

Synopsis

Overview

- Keywords: Deep Learning, Highway Networks, Neural Networks, Stochastic Gradient Descent, Information Flow

- Objective: Introduce a new architecture called highway networks to facilitate the training of very deep neural networks.

- Hypothesis: Highway networks can be trained effectively using simple gradient descent, overcoming the challenges associated with depth in traditional networks.

Background

Preliminary Theories:

- Vanishing Gradients: Gradients shrink as they are backpropagated through many layers and become too small to update the early layers effectively, which hinders learning in deep networks.

- Long Short-Term Memory (LSTM): A type of recurrent neural network designed to capture long-range dependencies; its gating mechanism inspired the highway network architecture.

- Skip Connections: Connections that bypass one or more layers so that gradients can flow through a deep network more effectively, improving training dynamics.

- Layer-wise Training: An approach where networks are trained one layer at a time to ease the optimization process.
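The vanishing-gradient effect listed above can be illustrated with a toy calculation. The sketch below is not from the paper: it assumes a chain of scalar sigmoid units with a fixed weight `w` (a hypothetical setup chosen for simplicity) and multiplies the local derivatives together, as backpropagation would.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def chain_gradient(x, depth, w=1.0):
    """d(output)/d(input) for `depth` stacked scalar units a <- sigmoid(w * a)."""
    grad, a = 1.0, x
    for _ in range(depth):
        a = sigmoid(w * a)
        # Local derivative: w * sigmoid'(z), where sigmoid'(z) = s * (1 - s) <= 0.25.
        grad *= w * a * (1.0 - a)
    return grad

# Gradient magnitude at a few depths for the same input.
grads = {d: chain_gradient(0.5, d) for d in (1, 10, 50)}
```

Because every factor is at most 0.25 when `w = 1`, the product shrinks geometrically with depth, which is the difficulty that gated carry paths are meant to ease.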

Prior Research:

- 2012: Alex Krizhevsky et al. demonstrated significant improvements in image classification using deep convolutional networks.

- 2014: Karen Simonyan and Andrew Zisserman introduced very deep convolutional networks, which set new benchmarks in image recognition.

- 2015: FitNets proposed a two-stage training process to facilitate the training of deeper networks.

Methodology

Key Ideas:

- Highway Networks: Use adaptive gating units, inspired by LSTM, that let information flow unimpeded across many layers.

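- Transform and Carry Gates: Each layer has a transform gate T and a carry gate C that determine how much of its output comes from transforming the input and how much is carried forward unchanged. With C = 1 - T, a layer computes y = H(x) · T(x) + x · (1 - T(x)), where H is the usual nonlinear transform.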

- Stochastic Gradient Descent (SGD): Used to train highway networks directly; it remains effective even at extreme depths.
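The gating idea above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: it assumes fully connected layers of equal width, a tanh transform, a carry gate tied to the transform gate as C = 1 - T, and a negative gate bias so that layers start out mostly carrying their input. The names `highway_layer`, `W_H`, `W_T`, `b_H`, and `b_T` are chosen here for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def highway_layer(x, W_H, b_H, W_T, b_T):
    """One highway layer: y = H(x) * T(x) + x * (1 - T(x))."""
    H = np.tanh(x @ W_H + b_H)    # candidate transform (tanh is an assumption)
    T = sigmoid(x @ W_T + b_T)    # transform gate, each entry in (0, 1)
    return H * T + x * (1.0 - T)  # carry gate is tied to the transform gate: C = 1 - T

rng = np.random.default_rng(0)
dim, depth = 8, 50
x = rng.normal(size=(4, dim))     # batch of 4 inputs

y = x
for _ in range(depth):
    W_H = rng.normal(scale=0.1, size=(dim, dim))
    W_T = rng.normal(scale=0.1, size=(dim, dim))
    b_H = np.zeros(dim)
    b_T = np.full(dim, -2.0)      # assumed negative bias: gates begin mostly closed
    y = highway_layer(y, W_H, b_H, W_T, b_T)
```

Input and output must have the same width for the carry term `x * (1 - T)` to be well defined. When the gate is driven toward zero, the layer reduces to the identity, which is what lets information pass through many layers unchanged.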

Experiments:

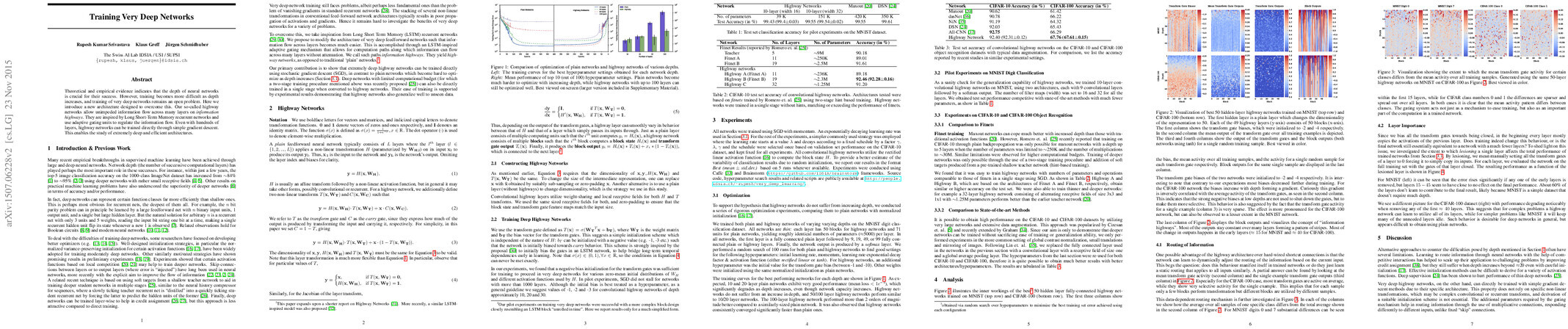

- MNIST Classification: Compared highway networks with plain networks at varying depths; highway networks performed better in the deeper configurations.

- CIFAR-10 and CIFAR-100: Conducted experiments to compare highway networks with state-of-the-art methods, demonstrating competitive accuracy with fewer parameters.

- Layer Importance Analysis: Investigated the contribution of individual layers to overall performance by lesioning layers and observing changes in accuracy.
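The lesioning procedure in the last item can be sketched as follows. The sketch assumes that lesioning a layer means forcing its transform gate to zero, so the layer simply copies its input. It uses randomly initialized weights and measures the change in network output rather than accuracy, since no trained model or dataset is included here; `forward`, `layers`, and `impact` are illustrative names.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, layers, lesioned=()):
    """Run a stack of highway layers, forcing T = 0 for indices in `lesioned`."""
    for i, (W_H, b_H, W_T, b_T) in enumerate(layers):
        if i in lesioned:
            continue  # T = 0 gives y = x, so a lesioned layer just copies its input
        H = np.tanh(x @ W_H + b_H)
        T = sigmoid(x @ W_T + b_T)
        x = H * T + x * (1.0 - T)
    return x

rng = np.random.default_rng(0)
dim, depth = 8, 10
layers = [
    (rng.normal(scale=0.5, size=(dim, dim)), np.zeros(dim),
     rng.normal(scale=0.5, size=(dim, dim)), np.full(dim, -1.0))
    for _ in range(depth)
]
x = rng.normal(size=(16, dim))
baseline = forward(x, layers)

# Mean absolute output shift when each layer is lesioned in turn
# (a stand-in for the accuracy drop measured on a trained network).
impact = [
    float(np.mean(np.abs(forward(x, layers, lesioned={i}) - baseline)))
    for i in range(depth)
]
```

With a trained network, the same loop would evaluate accuracy on a held-out set for each lesioned layer; layers whose removal barely changes the result contribute little to the computation.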

Implications: Because highway networks make very deep architectures trainable, depth can be studied directly, and the lesioning results indicate how much depth a given task actually uses.

Findings

Outcomes:

- Highway networks with 100 layers performed comparably to shallower networks and significantly outperformed plain networks at that depth.

- Convergence rates for highway networks were faster than those for traditional networks, indicating better optimization dynamics.

- Layer importance varied by dataset complexity, with simpler datasets (like MNIST) showing less reliance on depth compared to more complex datasets (like CIFAR-100).

Significance: The findings challenge the prevailing belief that increasing depth inherently complicates training, showcasing a viable solution through highway networks.

Future Work: Further exploration of highway networks in various domains, including reinforcement learning and generative models, could yield additional insights.

Potential Impact: Advancements in training methodologies for deep networks could lead to breakthroughs in applications requiring complex architectures, such as natural language processing and computer vision.