Understanding deep learning requires rethinking generalization

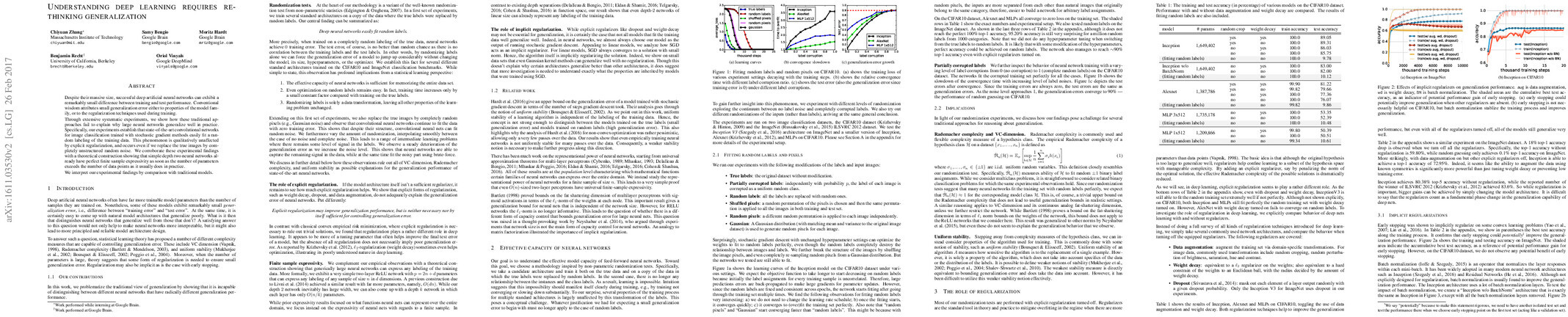

Abstract: Despite their massive size, successful deep artificial neural networks can exhibit a remarkably small difference between training and test performance. Conventional wisdom attributes small generalization error either to properties of the model family, or to the regularization techniques used during training. Through extensive systematic experiments, we show how these traditional approaches fail to explain why large neural networks generalize well in practice. Specifically, our experiments establish that state-of-the-art convolutional networks for image classification trained with stochastic gradient methods easily fit a random labeling of the training data. This phenomenon is qualitatively unaffected by explicit regularization, and occurs even if we replace the true images by completely unstructured random noise. We corroborate these experimental findings with a theoretical construction showing that simple depth two neural networks already have perfect finite sample expressivity as soon as the number of parameters exceeds the number of data points as it usually does in practice. We interpret our experimental findings by comparison with traditional models.

Synopsis

Overview

- Keywords: Deep Learning, Generalization, Neural Networks, Regularization, Randomization Tests

- Objective: Investigate the generalization capabilities of deep neural networks and challenge traditional theories of generalization.

- Hypothesis: Large neural networks can fit random labels and noise, questioning the adequacy of existing generalization theories.

Background

Preliminary Theories:

- VC Dimension: A measure of the capacity of a statistical classification algorithm, indicating how well a model can generalize to unseen data.

- Rademacher Complexity: A measure of the richness of a class of functions, used to derive generalization bounds.

- Uniform Stability: A property of learning algorithms that quantifies how sensitive the output is to changes in the training data.

- Implicit Regularization: The phenomenon where certain training algorithms (like SGD) lead to solutions that generalize well without explicit regularization.

Prior Research:

- 1998: VC dimension introduced as a fundamental concept in statistical learning theory.

- 2003: Rademacher complexity formalized, providing a flexible measure for generalization.

- 2002: Uniform stability proposed as a method to analyze generalization in learning algorithms.

- 2016: Studies on implicit regularization in SGD highlighted its role in achieving good generalization despite high model capacity.

Methodology

Key Ideas:

- Randomization Tests: Training neural networks on datasets with random labels to assess their fitting capabilities.

- Fitting Random Labels: Demonstrated that neural networks can achieve zero training error even when trained on completely random labels.

- Noise Variability: Experiments conducted by varying the level of noise in the input data to observe changes in generalization performance.

Experiments:

- Datasets: CIFAR10 and ImageNet used for testing various architectures (Inception, AlexNet, MLPs).

- Metrics: Training and test accuracy measured, with specific focus on generalization error as noise levels increased.

- Regularization Techniques: Evaluated the impact of explicit regularization methods (weight decay, dropout) on model performance.

Implications: Findings suggest that traditional complexity measures fail to explain the generalization performance of large neural networks, indicating a need for new theoretical frameworks.

Findings

Outcomes:

- Neural networks can perfectly fit random labels, achieving zero training error, but with high test error.

- Generalization error increases with the level of noise in the labels, confirming that networks can capture remaining signals in noisy data.

- Explicit regularization techniques do not fundamentally alter the generalization capabilities of neural networks.

Significance: Challenges the prevailing belief that regularization is essential for generalization, showing that neural networks can generalize well even without it.

Future Work: Further exploration of the properties of architectures that lead to better generalization, as well as the development of new theoretical measures that accurately reflect the effective capacity of neural networks.

Potential Impact: Advancements in understanding generalization could lead to more robust neural network designs and improved training methodologies, enhancing performance across various applications.