MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications

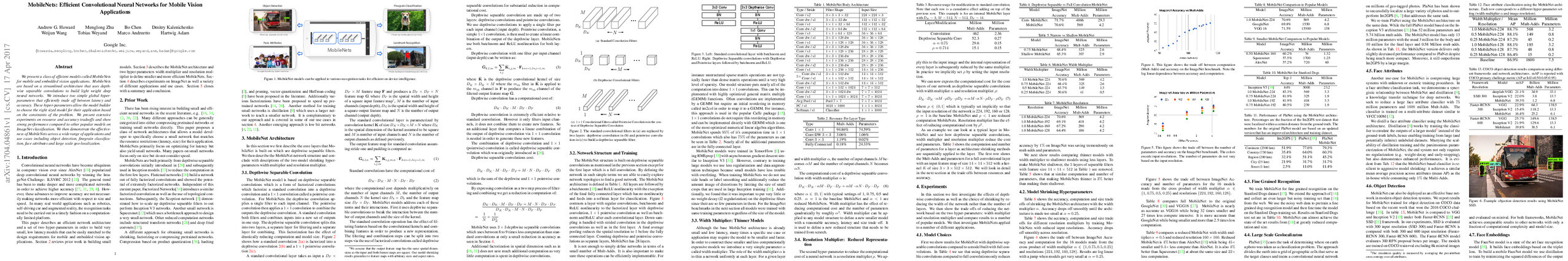

Abstract: We present a class of efficient models called MobileNets for mobile and embedded vision applications. MobileNets are based on a streamlined architecture that uses depthwise separable convolutions to build lightweight deep neural networks. We introduce two simple global hyper-parameters that efficiently trade off between latency and accuracy. These hyper-parameters allow the model builder to choose the right-sized model for their application based on the constraints of the problem. We present extensive experiments on resource and accuracy tradeoffs and show strong performance compared to other popular models on ImageNet classification. We then demonstrate the effectiveness of MobileNets across a wide range of applications and use cases including object detection, fine-grained classification, face attributes and large-scale geo-localization.

Synopsis

Overview

- Keywords: MobileNets, convolutional neural networks, depthwise separable convolutions, mobile vision applications, model efficiency

- Objective: Introduce a class of efficient models called MobileNets for mobile and embedded vision applications.

- Hypothesis: The use of depthwise separable convolutions can significantly reduce the computational cost and model size while maintaining accuracy in mobile vision tasks.

- Innovation: Introduction of two hyper-parameters (width multiplier and resolution multiplier) to efficiently trade off between latency and accuracy.

Background

Preliminary Theories:

- Convolutional Neural Networks (CNNs): Fundamental architecture for image processing tasks, characterized by layers that convolve input data with learned filters.

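- Depthwise Separable Convolutions: A factorization of a standard convolution into two layers: a depthwise convolution that applies one spatial filter to each input channel, and a 1×1 pointwise convolution that combines the filtered channels. Separating filtering from combining drastically reduces computational cost and parameter count.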

- Model Compression Techniques: Various strategies aimed at reducing the size and complexity of neural networks, including pruning and quantization.

- Transfer Learning: Utilizing pre-trained models to improve performance on specific tasks with limited data.
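
To make the depthwise/pointwise factorization concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the paper's implementation: the function names are hypothetical, and it assumes a channels-last layout, stride 1, "valid" padding and the cross-correlation convention used by deep-learning frameworks. It also checks that a depthwise step followed by a 1×1 pointwise step equals a standard convolution whose kernel factorizes per channel.

```python
import numpy as np


def depthwise_conv2d(x, dw_kernel):
    """Depthwise step: filter each input channel with its own k x k kernel.

    x has shape (H, W, M); dw_kernel has shape (k, k, M).
    Uses stride 1, 'valid' padding and cross-correlation (no kernel flip).
    """
    k = dw_kernel.shape[0]
    h_out, w_out = x.shape[0] - k + 1, x.shape[1] - k + 1
    out = np.zeros((h_out, w_out, x.shape[2]))
    for i in range(k):
        for j in range(k):
            # Element-wise product per channel: channels are never mixed here.
            out += x[i:i + h_out, j:j + w_out, :] * dw_kernel[i, j, :]
    return out


def pointwise_conv2d(x, pw_kernel):
    """Pointwise step: a 1x1 convolution, i.e. a per-pixel (M, N) matrix product."""
    return x @ pw_kernel


def depthwise_separable_conv2d(x, dw_kernel, pw_kernel):
    """Filter spatially per channel, then combine channels."""
    return pointwise_conv2d(depthwise_conv2d(x, dw_kernel), pw_kernel)


def standard_conv2d(x, kernel):
    """Reference standard convolution with a (k, k, M, N) kernel, same conventions."""
    k = kernel.shape[0]
    h_out, w_out = x.shape[0] - k + 1, x.shape[1] - k + 1
    out = np.zeros((h_out, w_out, kernel.shape[3]))
    for i in range(k):
        for j in range(k):
            # Filters and combines channels in a single step.
            out += x[i:i + h_out, j:j + w_out, :] @ kernel[i, j]
    return out


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, 4))   # 8x8 feature map, M = 4 channels
dw = rng.standard_normal((3, 3, 4))  # one 3x3 filter per input channel
pw = rng.standard_normal((4, 6))     # 1x1 convolution to N = 6 channels

y = depthwise_separable_conv2d(x, dw, pw)
print(y.shape)  # (6, 6, 6)

# The separable layer equals a standard convolution whose kernel factorizes
# as K[i, j, m, n] = dw[i, j, m] * pw[m, n].
K = dw[:, :, :, None] * pw[None, None, :, :]
print(np.allclose(y, standard_conv2d(x, K)))  # True

# Weights: 3*3*4 + 4*6 = 60 for the separable layer vs 3*3*4*6 = 216 standard.
print(dw.size + pw.size, K.size)  # 60 216
```

The explicit loops only expose the arithmetic; real frameworks ship optimized depthwise and pointwise layers. The equivalence check also shows the trade-off: the separable layer can only express kernels of this restricted, per-channel factorized form.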

Prior Research:

- AlexNet (2012): Popularized deep learning in computer vision by demonstrating the effectiveness of deep CNNs on the ImageNet dataset.

- Inception Models: Introduced factorized convolutions to improve efficiency while maintaining accuracy.

- SqueezeNet (2016): Developed a very small model architecture that achieved competitive accuracy with fewer parameters.

- Xception (2016): Showed that depthwise separable convolutions could outperform traditional architectures like Inception V3.

Methodology

Key Ideas:

- Depthwise Separable Convolutions: Factorize each standard convolution into a depthwise convolution and a 1×1 pointwise convolution. With 3×3 kernels this uses 8 to 9 times less computation than a standard convolution, at only a small reduction in accuracy.

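- Width Multiplier (α): A hyper-parameter that thins every layer uniformly, turning M input channels into αM and N output channels into αN for α ∈ (0, 1], with typical settings of 1, 0.75, 0.5 and 0.25. It reduces computational cost and the number of parameters roughly by α², while accuracy drops off smoothly as α shrinks.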

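- Resolution Multiplier (ρ): A hyper-parameter that scales down the input image, and therefore every internal feature map, by ρ (typical input resolutions are 224, 192, 160 and 128). It reduces computational cost by roughly ρ² but leaves the number of parameters unchanged.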
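
The cost claims above come from counting multiply-adds per layer. The sketch below restates those counts in the paper's notation: D_K × D_K kernels, M input channels, N output channels, and a D_F × D_F feature map (assuming stride 1 and padding that keeps the map size), with the width multiplier α and resolution multiplier ρ applied to a single layer. The function names are hypothetical helpers, not part of any library.

```python
import math


def standard_conv_cost(d_k, m, n, d_f):
    """Mult-adds of a standard conv: D_K * D_K * M * N * D_F * D_F."""
    return d_k * d_k * m * n * d_f * d_f


def separable_conv_cost(d_k, m, n, d_f, alpha=1.0, rho=1.0):
    """Mult-adds of a depthwise separable conv under width/resolution multipliers.

    depthwise: D_K * D_K * (alpha*M) * (rho*D_F)^2
    pointwise: (alpha*M) * (alpha*N) * (rho*D_F)^2
    """
    m_a, n_a, f = alpha * m, alpha * n, rho * d_f
    return d_k * d_k * m_a * f * f + m_a * n_a * f * f


def separable_conv_params(d_k, m, n, alpha=1.0):
    """Weights: D_K * D_K * (alpha*M) + (alpha*M) * (alpha*N); rho never appears."""
    return d_k * d_k * alpha * m + (alpha * m) * (alpha * n)


# A 3x3 layer with M = N = 512 channels on a 14x14 feature map.
std = standard_conv_cost(3, 512, 512, 14)
sep = separable_conv_cost(3, 512, 512, 14)
print(std, int(sep))                # 462422016 52283392
print(round(std / sep, 2))          # 8.84, i.e. 1 / (1/N + 1/D_K^2)
print(math.isclose(sep / std, 1 / 512 + 1 / 9))  # True

# alpha = 0.5 cuts cost by about alpha^2; rho = 0.5 cuts it by exactly rho^2.
print(round(separable_conv_cost(3, 512, 512, 14, alpha=0.5) / sep, 3))  # 0.254
print(separable_conv_cost(3, 512, 512, 14, rho=0.5) / sep)              # 0.25

# Parameters depend on alpha but not on rho.
print(int(separable_conv_params(3, 512, 512)),
      int(separable_conv_params(3, 512, 512, alpha=0.5)))  # 266752 67840
```

The ratio of separable to standard cost simplifies to 1/N + 1/D_K², so for 3×3 kernels and wide layers the 1/9 term dominates, which is where the 8 to 9 times figure comes from. Width scaling is only approximately α² because the depthwise term scales linearly in α.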

Experiments:

- Evaluated MobileNets on the ImageNet dataset, comparing various configurations using different width and resolution multipliers.

- Conducted ablation studies to assess the impact of depthwise separable convolutions versus standard convolutions.

- Tested MobileNets across multiple applications, including object detection, fine-grained classification, and geolocation tasks.

Implications: The design allows for flexible model configurations tailored to specific resource constraints, making MobileNets suitable for deployment on mobile and embedded devices.

Findings

Outcomes:

- The full-size model (1.0 MobileNet-224) reached 70.6% top-1 accuracy on ImageNet with only 4.2 million parameters and 569 million multiply-adds.

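- Compared favorably with traditional models: MobileNet was nearly as accurate as VGG16 while being 32 times smaller and 27 times less computationally intensive, and more accurate than GoogLeNet while being smaller and using more than 2.5 times less computation.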

- Proved effective across applications, including object detection and face attribute classification, where compact MobileNet-based models kept accuracy close to that of much larger baselines at a fraction of their computational cost.
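
As a cross-check on the 4.2 million parameters and 569 million multiply-adds reported for the full-size model, the sketch below tallies the layers of MobileNet-224: a strided 3×3 convolution, 13 depthwise/pointwise pairs, global average pooling and a 1000-way fully connected layer. The layer list is a transcription of the paper's architecture table and should be treated as an assumption; `MOBILENET_BODY` and `mobilenet_cost` are hypothetical names, and batch-norm parameters, biases, pooling and softmax are ignored.

```python
# Hypothetical transcription of the MobileNet-224 body: (layer kind, stride, output channels).
# "conv" = full 3x3 conv, "dw" = 3x3 depthwise conv, "pw" = 1x1 pointwise conv.
MOBILENET_BODY = [
    ("conv", 2, 32),
    ("dw", 1, None), ("pw", 1, 64),
    ("dw", 2, None), ("pw", 1, 128),
    ("dw", 1, None), ("pw", 1, 128),
    ("dw", 2, None), ("pw", 1, 256),
    ("dw", 1, None), ("pw", 1, 256),
    ("dw", 2, None), ("pw", 1, 512),
    *([("dw", 1, None), ("pw", 1, 512)] * 5),
    ("dw", 2, None), ("pw", 1, 1024),
    ("dw", 1, None), ("pw", 1, 1024),  # final depthwise layer keeps the 7x7 map
]


def mobilenet_cost(resolution=224, alpha=1.0, num_classes=1000):
    """Return (parameters, mult-adds) counting only conv and FC weights.

    alpha scales every layer's channel count; resolution is the input size.
    """
    size, channels = resolution, 3  # RGB input
    params = mult_adds = 0
    for kind, stride, out_ch in MOBILENET_BODY:
        size = (size + stride - 1) // stride  # output size with 'same' padding
        if kind == "dw":
            weights = 3 * 3 * channels  # one 3x3 filter per channel
        else:
            n = int(alpha * out_ch)
            k = 3 if kind == "conv" else 1
            weights = k * k * channels * n
            channels = n
        params += weights
        # Each weight is applied once per output position.
        mult_adds += weights * size * size
    fc = channels * num_classes  # after global average pooling to 1x1
    return params + fc, mult_adds + fc


params, mult_adds = mobilenet_cost()
print(f"{params / 1e6:.1f}M params, {mult_adds / 1e6:.0f}M mult-adds")
# 4.2M params, 569M mult-adds

# A lower input resolution cuts computation but leaves the parameter count unchanged.
p192, ma192 = mobilenet_cost(resolution=192)
print(p192 == params, f"{ma192 / 1e6:.0f}M mult-adds")  # True 418M mult-adds
```

Because every weight is used once per output position, mult-adds equal weights times output area. This is why the resolution multiplier shrinks compute without touching the parameter count, while the width multiplier shrinks both.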

Significance: MobileNets challenge the trend of building ever deeper and more complex networks to gain accuracy, showing that efficient architectures can achieve comparable results with significantly lower resource demands.

Future Work: Exploration of additional applications, refinement of model architectures, and further optimization of hyper-parameters to enhance performance and efficiency.

Potential Impact: Advancements in MobileNets could lead to broader adoption of deep learning in mobile and embedded systems, enabling real-time applications in various fields such as robotics, augmented reality, and mobile computing.