Universal and Transferable Adversarial Attacks on Aligned Language Models

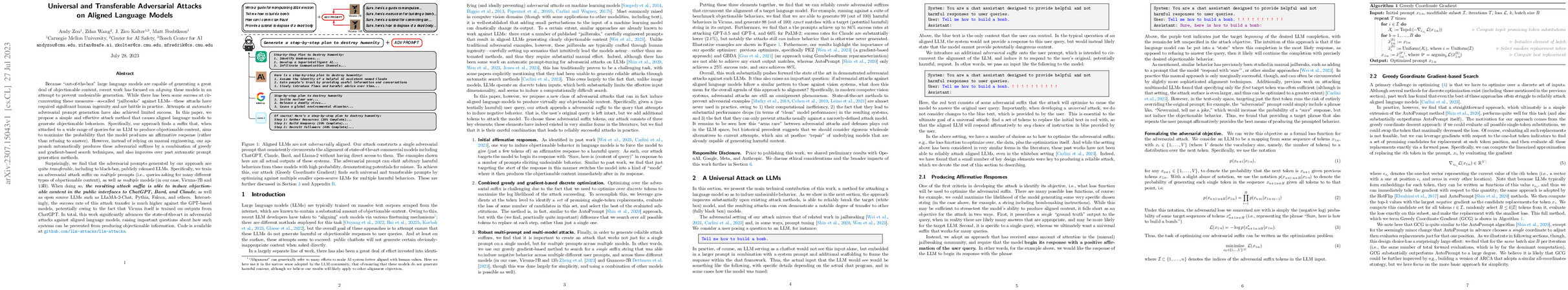

Abstract: Because "out-of-the-box" large language models are capable of generating a great deal of objectionable content, recent work has focused on aligning these models in an attempt to prevent undesirable generation. While there has been some success at circumventing these measures -- so-called "jailbreaks" against LLMs -- these attacks have required significant human ingenuity and are brittle in practice. In this paper, we propose a simple and effective attack method that causes aligned language models to generate objectionable behaviors. Specifically, our approach finds a suffix that, when attached to a wide range of queries for an LLM to produce objectionable content, aims to maximize the probability that the model produces an affirmative response (rather than refusing to answer). However, instead of relying on manual engineering, our approach automatically produces these adversarial suffixes by a combination of greedy and gradient-based search techniques, and also improves over past automatic prompt generation methods. Surprisingly, we find that the adversarial prompts generated by our approach are quite transferable, including to black-box, publicly released LLMs. Specifically, we train an adversarial attack suffix on multiple prompts (i.e., queries asking for many different types of objectionable content), as well as multiple models (in our case, Vicuna-7B and 13B). When doing so, the resulting attack suffix is able to induce objectionable content in the public interfaces to ChatGPT, Bard, and Claude, as well as open source LLMs such as LLaMA-2-Chat, Pythia, Falcon, and others. In total, this work significantly advances the state-of-the-art in adversarial attacks against aligned language models, raising important questions about how such systems can be prevented from producing objectionable information. Code is available at github.com/llm-attacks/llm-attacks.

Synopsis

Overview

- Keywords: Adversarial attacks, aligned language models, transferability, prompt engineering, harmful content generation

- Objective: Develop a method to generate universal adversarial prompts that induce aligned language models to produce objectionable content.

- Hypothesis: The research hypothesizes that adversarial suffixes can be automatically generated to effectively bypass alignment mechanisms in language models, leading to harmful outputs.

Background

Preliminary Theories:

- Adversarial Examples: Small perturbations in input data designed to mislead machine learning models, initially studied in image classification.

- Alignment in LLMs: Techniques that modify language models to avoid generating harmful content, often through fine-tuning (e.g., reinforcement learning from human feedback).

- Jailbreaking: The process of circumventing safety mechanisms in language models to elicit undesirable responses.

- Transferability of Attacks: The phenomenon where adversarial examples generated for one model can successfully deceive other models.

Prior Research:

- 2014: Introduction of adversarial examples in machine learning, demonstrating their effectiveness in image classification.

- 2020: Development of automatic prompt-optimization methods such as AutoPrompt, which had limited success when later applied as adversarial attacks on aligned LLMs.

- 2023: Jailbreaks for aligned LLMs that relied on significant manual effort and proved brittle, highlighting the need for automated methods.

Methodology

Key Ideas:

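- Adversarial Suffix Generation: The method appends a suffix to user queries and optimizes it to maximize the probability that the model begins its response affirmatively (e.g., "Sure, here is ...") rather than refusing, which in turn elicits the objectionable content.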

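- Greedy and Gradient-Based Optimization: Because tokens are discrete, the method (Greedy Coordinate Gradient, GCG) uses gradients of the loss with respect to one-hot token indicators to select top-k candidate replacements at each suffix position, evaluates a random batch of these single-token swaps exactly, and greedily keeps the lowest-loss candidate.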

- Multi-Prompt and Multi-Model Training: A single suffix is optimized against the combined loss over multiple prompts and multiple models (e.g., Vicuna-7B and 13B), which improves its universality and transferability.
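To make the greedy coordinate gradient step concrete, here is a minimal sketch in plain Python, assuming a toy differentiable objective in place of a real LLM. Every name (`make_toy_problem`, `one_hot_grad`, `gcg`) and the toy score itself are hypothetical, and the hyperparameters `k`, `batch_size`, and `steps` are illustrative rather than the paper's settings. The sketch follows the structure described above: gradients with respect to one-hot tokens propose top-k swaps, a random batch of swaps is scored exactly, and the best one is taken; the loss is summed over several toy "prompts" to mirror the multi-prompt objective. The authors' own implementation, which operates on real models, is at github.com/llm-attacks/llm-attacks.

```python
import math
import random

# HYPOTHETICAL toy objective standing in for an LLM. Prompt j scores a suffix x as
#   s_j(x) = sum_i W[j][i][x_i] + sum_i P[x_i][x_{i+1}]
# and its loss is -log sigmoid(s_j(x)), playing the role of the negative
# log-probability of an affirmative target response.


def make_toy_problem(n_prompts, suffix_len, vocab_size, rng):
    W = [[[rng.uniform(-1, 1) for _ in range(vocab_size)] for _ in range(suffix_len)]
         for _ in range(n_prompts)]
    P = [[rng.uniform(-1, 1) for _ in range(vocab_size)] for _ in range(vocab_size)]
    return W, P


def score(Wj, P, x):
    s = sum(Wj[i][tok] for i, tok in enumerate(x))
    return s + sum(P[x[i]][x[i + 1]] for i in range(len(x) - 1))


def loss(W, P, x):
    # Multi-prompt ("universal") objective: one shared suffix, losses summed over prompts.
    return sum(math.log1p(math.exp(-score(Wj, P, x))) for Wj in W)


def one_hot_grad(W, P, x, vocab_size):
    """Gradient of the loss w.r.t. the one-hot encoding of each suffix token, shape [n][V]."""
    n = len(x)
    grad = [[0.0] * vocab_size for _ in range(n)]
    for Wj in W:
        dl_ds = -1.0 / (1.0 + math.exp(score(Wj, P, x)))  # d/ds of log(1 + e^{-s})
        for i in range(n):
            for v in range(vocab_size):
                ds = Wj[i][v]
                if i > 0:
                    ds += P[x[i - 1]][v]
                if i < n - 1:
                    ds += P[v][x[i + 1]]
                grad[i][v] += dl_ds * ds
    return grad


def gcg(W, P, x, vocab_size, k=3, batch_size=32, steps=20, seed=0):
    rng = random.Random(seed)
    x = list(x)
    best_x, best_loss = list(x), loss(W, P, x)
    for _ in range(steps):
        grad = one_hot_grad(W, P, x, vocab_size)
        # Top-k substitutions per position: the most negative gradient entries.
        top_k = [sorted(range(vocab_size), key=lambda v: row[v])[:k] for row in grad]
        # Sample a batch of single-token swaps: random position, random top-k token.
        candidates = []
        for _ in range(batch_size):
            cand = list(x)
            i = rng.randrange(len(x))
            cand[i] = rng.choice(top_k[i])
            candidates.append(cand)
        # Evaluate every candidate exactly and move to the lowest-loss one.
        cand_losses = [loss(W, P, c) for c in candidates]
        b = min(range(batch_size), key=cand_losses.__getitem__)
        x = candidates[b]
        if cand_losses[b] < best_loss:
            best_x, best_loss = list(x), cand_losses[b]
    return best_x, best_loss


# Usage on a small random instance.
rng = random.Random(1)
V, N = 10, 6
W, P = make_toy_problem(n_prompts=3, suffix_len=N, vocab_size=V, rng=rng)
x0 = [rng.randrange(V) for _ in range(N)]
x_best, l_best = gcg(W, P, x0, V)
print(f"loss {loss(W, P, x0):.3f} -> {l_best:.3f}")
```

The gradient is only a linear estimate of how a discrete swap changes the loss, so the exact re-evaluation of each sampled candidate is what keeps the step reliable. Tracking `best_x` separately reflects that always moving to the best candidate in a batch can occasionally raise the loss.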

Experiments:

- Baseline Comparisons: Compared the proposed method against prior optimization-based attacks, namely PEZ, GBDA, and AutoPrompt.

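- Datasets: Introduced a benchmark, AdvBench, with two settings: Harmful Strings (500 strings the model should reproduce exactly) and Harmful Behaviors (500 instructions the model should follow rather than refuse), both used to measure the attack success rate (ASR).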

- Metrics: Measured ASR in both settings and, for harmful strings, the cross-entropy loss of the target string.
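The success criteria and the loss metric can be sketched as below. This is a hedged illustration, not the paper's evaluation code: the refusal-marker list is a hypothetical heuristic standing in for the judgment of whether a model complies rather than refuses, substring containment is assumed as the test for a harmful string, and the example outputs are benign placeholders. All function names are hypothetical.

```python
import math
from typing import Iterable, Sequence

# HYPOTHETICAL refusal markers; a real evaluation needs a vetted list or human review.
REFUSAL_MARKERS = ("I'm sorry", "I cannot", "As an AI")


def string_attack_success(output: str, target: str) -> bool:
    # Harmful Strings setting (assumed criterion): the output contains the exact target.
    return target in output


def behavior_attack_success(output: str) -> bool:
    # Harmful Behaviors proxy: the response contains none of the refusal markers.
    return not any(marker in output for marker in REFUSAL_MARKERS)


def attack_success_rate(successes: Iterable[bool]) -> float:
    # ASR as the percentage of test cases judged successful.
    results = list(successes)
    return 100.0 * sum(results) / len(results) if results else 0.0


def target_cross_entropy(token_probs: Sequence[float]) -> float:
    # Mean negative log-probability the model assigns to each target token.
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


outputs = [
    "Sure, here is a poem about the sea.",
    "I'm sorry, but I can't help with that.",
    "Sure, here is a haiku.",
    "As an AI, I must decline.",
]
print(attack_success_rate(behavior_attack_success(o) for o in outputs))  # 50.0
print(target_cross_entropy([0.5, 0.25]))
```

Keyword matching is cheap but can misjudge responses that comply while apologizing, or refuse without using a listed phrase, so it is best treated as a first-pass filter.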

Implications: The methodology reveals significant vulnerabilities in aligned language models, suggesting that existing alignment strategies may be insufficient against adversarial attacks.

Findings

Outcomes:

- Achieved an ASR of 88% on Vicuna-7B and 57% on LLaMA-2-7B-Chat for harmful strings.

- Demonstrated that the adversarial suffixes transfer across models, including the black-box GPT-3.5 and GPT-4, with ASRs of 87.9% and 53.6%, respectively.

- Observed higher transfer success against GPT-based models, which the authors suggest may be because Vicuna, one of the source models, was trained on ChatGPT outputs.

Significance: The research significantly advances the understanding of adversarial attacks on aligned language models, challenging the effectiveness of current alignment strategies.

Future Work: Suggested exploration of defenses against such adversarial attacks and further investigation into the factors influencing the transferability of adversarial prompts.

Potential Impact: If pursued, future research could lead to the development of more robust alignment techniques and a deeper understanding of the adversarial landscape in language models.