Efficient Estimation of Word Representations in Vector Space

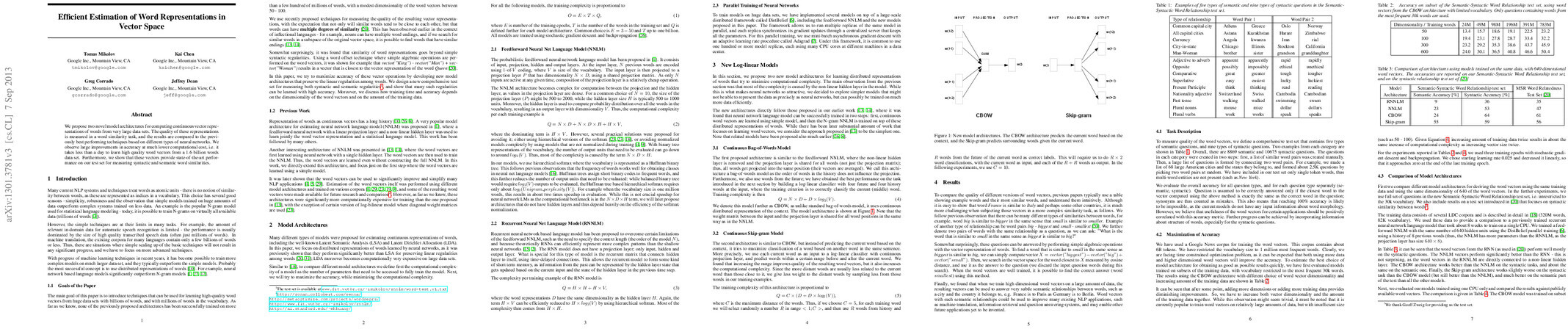

Abstract: We propose two novel model architectures for computing continuous vector representations of words from very large data sets. The quality of these representations is measured in a word similarity task, and the results are compared to the previously best performing techniques based on different types of neural networks. We observe large improvements in accuracy at much lower computational cost, i.e. it takes less than a day to learn high quality word vectors from a 1.6 billion words data set. Furthermore, we show that these vectors provide state-of-the-art performance on our test set for measuring syntactic and semantic word similarities.

Synopsis

Overview

- Keywords: Word representations, vector space, neural networks, continuous bag-of-words, skip-gram, computational efficiency

- Objective: Introduce novel model architectures for efficient computation of continuous vector representations of words from large datasets.

- Hypothesis: High-quality word vectors can be learned from vast datasets with reduced computational complexity compared to existing models.

- Innovation: Development of two new architectures (CBOW and Skip-gram) that significantly improve training speed and accuracy while maintaining the ability to capture semantic and syntactic relationships.

Background

Preliminary Theories:

- Distributed Representations: Concept that words can be represented as continuous vectors in a high-dimensional space, capturing semantic meanings.

- Neural Network Language Models (NNLM): Models that use neural networks to predict the probability of word sequences, improving upon traditional statistical methods.

- Hierarchical Softmax: A technique to reduce the computational complexity of output layers in neural networks by organizing vocabulary into a binary tree.

- Word Similarity and Relationships: Prior research has shown that word vectors can capture complex relationships, such as analogies (e.g., "king" - "man" + "woman" = "queen").

Prior Research:

- 2003: Introduction of NNLM by Bengio et al., demonstrating the potential of neural networks for language modeling.

- 2008: Collobert and Weston present a unified architecture for NLP tasks using deep learning.

- 2010: The introduction of recurrent neural networks (RNNs) for language modeling, allowing for more complex pattern recognition.

- 2011: Advances in training large-scale neural networks, enhancing the efficiency of language models.

Methodology

Key Ideas:

- Continuous Bag-of-Words (CBOW): Predicts the current word based on its context (surrounding words), using a shared projection layer to reduce complexity.

- Skip-gram Model: Predicts surrounding words given the current word, allowing for a more nuanced understanding of word relationships.

- Hierarchical Softmax: Utilizes a binary tree structure for vocabulary to decrease the number of computations needed during training.

Experiments:

- Evaluation of models on a comprehensive test set designed to measure both syntactic and semantic relationships among words.

- Training on large datasets (e.g., Google News corpus with 6 billion tokens) to assess the scalability and efficiency of the proposed architectures.

- Comparison of the performance of CBOW and Skip-gram models against traditional NNLMs and RNNs.

Implications: The methodology allows for the training of high-dimensional word vectors from massive datasets efficiently, enabling the capture of complex linguistic relationships.

Findings

Outcomes:

- The Skip-gram model outperformed previous architectures in capturing semantic relationships, achieving significant accuracy improvements on the test set.

- CBOW demonstrated competitive performance on syntactic tasks while being computationally efficient.

- Both models achieved high-quality word vectors within a fraction of the time required by traditional methods.

Significance: This research challenges the belief that complex models are necessary for high-quality word representations, showing that simpler architectures can achieve superior results with less computational overhead.

Future Work: Exploration of further optimizations in model architectures, integration of morphological information to enhance accuracy, and application of learned vectors in various NLP tasks.

Potential Impact: Advancements in word vector training techniques could lead to improved performance in machine translation, information retrieval, and other NLP applications, potentially reshaping the landscape of natural language processing.