Stacked Attention Networks for Image Question Answering

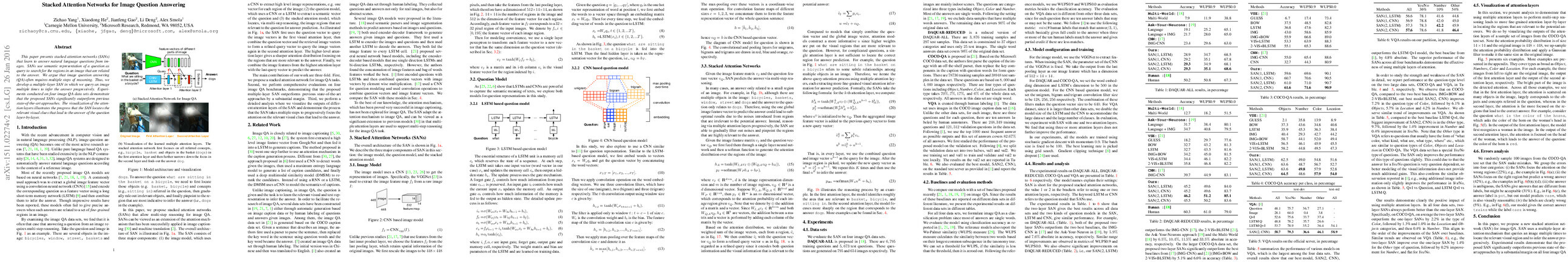

Abstract: This paper presents stacked attention networks (SANs) that learn to answer natural language questions from images. SANs use the semantic representation of a question as a query to search for the regions in an image that are related to the answer. We argue that image question answering (QA) often requires multiple steps of reasoning. Thus, we develop a multiple-layer SAN in which we query an image multiple times to infer the answer progressively. Experiments conducted on four image QA data sets demonstrate that the proposed SANs significantly outperform previous state-of-the-art approaches. The visualization of the attention layers illustrates how the SAN locates, layer by layer, the relevant visual clues that lead to the answer to the question.

Synopsis

Overview

- Keywords: Image Question Answering, Stacked Attention Networks, Multi-step Reasoning, Neural Networks, Attention Mechanism

- Objective: Develop a stacked attention network (SAN) to improve image question answering by enabling multi-step reasoning.

- Hypothesis: Querying the image several times, with each attention layer refining the query, lets the model focus on the image regions relevant to the answer more precisely than a single attention step.

Background

Preliminary Theories:

- Attention Mechanism: A technique in neural networks that allows models to focus on specific parts of the input data, improving performance in tasks like image captioning and translation.

- Convolutional Neural Networks (CNNs): Deep learning models particularly effective for image processing, used to extract features from images.

- Long Short-Term Memory (LSTM): A type of recurrent neural network (RNN) that excels in sequence prediction tasks, often used for processing text data.

- Multi-step Reasoning: The process of breaking down complex tasks into simpler steps, allowing models to progressively refine their focus on relevant information.
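The core of an attention mechanism can be sketched in a few lines: score each input against a query, normalise the scores with a softmax, and return the weighted sum of the inputs. This is a generic illustration only; it assumes simple dot-product scoring, which is not the scoring function SANs use, and the toy vectors are made up.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)  # subtract the max so exp() cannot overflow
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_attention(query, keys, values):
    """Generic soft attention with dot-product scores (an assumption for illustration)."""
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)  # one non-negative weight per input, summing to 1
    dim = len(values[0])
    # Context vector: attention-weighted sum of the value vectors.
    context = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return weights, context


# Toy example: three "regions"; the second one points in the same direction as the query.
regions = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
weights, context = soft_attention([0.0, 3.0], regions, regions)
```

Here most of the weight (about 0.91) goes to the second region, and the context vector is dominated by it; the model "focuses" on the input that best matches the query.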

Prior Research:

- 2014-2015: Introduction of attention mechanisms, first for neural machine translation (2014) and then for image captioning (2015), significantly improving the quality of generated captions.

- 2015: Development of various image QA models that utilized CNNs and LSTMs, laying the groundwork for combining visual and textual information.

- 2015: Creation of datasets like VQA and COCO-QA, which provided benchmarks for evaluating image question answering systems.

Methodology

Key Ideas:

- Stacked Attention Networks (SANs): A novel architecture that employs multiple attention layers to iteratively refine the focus on relevant image regions based on the question.

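- Image and Question Models: A CNN (VGGNet) extracts image features from its last pooling layer, giving a 14×14 grid of 512-dimensional region vectors for a 448×448 input image; the question is encoded either by an LSTM or by a CNN with unigram, bigram, and trigram filters.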

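- Multi-layer Attention Mechanism: Each attention layer uses the current query vector (initially the question vector) to compute an attention distribution over image regions, forms the attention-weighted sum of the region features, and adds it to the query to produce a refined query for the next layer. Later layers can therefore attend more precisely to the regions that hold the answer.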
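The stacked attention step can be sketched in plain Python. The sketch follows the SAN formulation as we understand it: for region features v_i and query u, compute h_i = tanh(W_IA v_i + W_QA u + b_A), score each region with w_P · h_i, take a softmax over regions, and set the next query to the attention-weighted sum of regions plus u; the answer is a softmax over a fixed answer vocabulary. The parameter names (`W_IA`, `W_QA`, `b_A`, `w_P`, `W_u`), the toy sizes, and the random, untrained weights are assumptions for illustration, and region vectors are assumed to already have the same dimension as the question vector.

```python
import math
import random


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def init_layer(d, k, rng):
    """Hypothetical random parameters for one layer: W_IA, W_QA (k x d), b_A, w_P (k)."""
    W_IA = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(k)]
    W_QA = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(k)]
    b_A = [0.0] * k
    w_P = [rng.uniform(-0.5, 0.5) for _ in range(k)]
    return W_IA, W_QA, b_A, w_P


def attention_layer(regions, query, params):
    """One attention layer: returns the attention map over regions and the refined query."""
    W_IA, W_QA, b_A, w_P = params
    q_proj = [a + b for a, b in zip(matvec(W_QA, query), b_A)]  # W_QA u + b_A
    scores = []
    for v in regions:
        h = [math.tanh(a + b) for a, b in zip(matvec(W_IA, v), q_proj)]
        # A bias shared by all regions would cancel in the softmax, so it is omitted.
        scores.append(sum(w * hj for w, hj in zip(w_P, h)))
    p = softmax(scores)
    attended = [sum(pi * v[j] for pi, v in zip(p, regions)) for j in range(len(query))]
    refined = [a + q for a, q in zip(attended, query)]  # attended image vector + previous query
    return p, refined


def stacked_attention(regions, question, layers):
    """Apply the layers in turn; each is queried with the previous layer's refined query."""
    u = question
    maps = []
    for params in layers:
        p, u = attention_layer(regions, u, params)
        maps.append(p)
    return maps, u


def answer_distribution(u, W_u, b_u):
    """softmax(W_u u + b_u) over a fixed answer vocabulary."""
    return softmax([s + b for s, b in zip(matvec(W_u, u), b_u)])


# Toy usage: 4-dim features, 3 attention units, 6 regions, 2 layers, 5 candidate answers.
rng = random.Random(0)
d, k, num_regions, num_answers = 4, 3, 6, 5
regions = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(num_regions)]
question = [rng.uniform(-1, 1) for _ in range(d)]
layers = [init_layer(d, k, rng) for _ in range(2)]
maps, u = stacked_attention(regions, question, layers)
W_u = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(num_answers)]
probs = answer_distribution(u, W_u, [0.0] * num_answers)
```

Each layer has its own parameters, and `maps` holds one attention distribution per layer, which is what the layer-by-layer visualizations display. Adding the attended vector to the previous query, rather than replacing it, keeps the question information available to every layer.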

Experiments:

- Evaluated on four datasets: DAQUAR-ALL, DAQUAR-REDUCED, COCO-QA, and VQA.

- Metrics: classification accuracy and WUPS, a set-based score built on Wu-Palmer (WUP) word similarity and reported at thresholds 0.9 and 0.0, were used to compare SANs with baseline models.
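The two metrics can be sketched as follows. WUPS averages, over questions, the minimum of two products: each predicted word's best match among the ground-truth words, and each ground-truth word's best match among the predicted words; similarities below the threshold are down-weighted by a factor of 0.1. A real evaluation would look up Wu-Palmer similarity in WordNet; the `toy_wup` table below is a hypothetical stand-in with made-up values.

```python
import math

# Made-up Wu-Palmer similarities, for illustration only.
TOY_WUP = {
    frozenset({"chair", "sofa"}): 0.86,
    frozenset({"chair", "lamp"}): 0.5,
}


def toy_wup(a, b):
    """Hypothetical word similarity: 1.0 for identical words, else a table lookup."""
    if a == b:
        return 1.0
    return TOY_WUP.get(frozenset({a, b}), 0.0)


def thresholded(sim, threshold):
    # Similarities below the threshold are down-weighted by a factor of 0.1.
    return sim if sim >= threshold else 0.1 * sim


def wups(predictions, truths, wup, threshold):
    """WUPS score in percent over lists of predicted and ground-truth answer sets."""
    total = 0.0
    for A, T in zip(predictions, truths):
        # Every predicted word should be close to some ground-truth word...
        forward = math.prod(max(thresholded(wup(a, t), threshold) for t in T) for a in A)
        # ...and every ground-truth word close to some predicted word.
        backward = math.prod(max(thresholded(wup(a, t), threshold) for a in A) for t in T)
        total += min(forward, backward)
    return 100.0 * total / len(predictions)


def accuracy(predictions, truths):
    """Percentage of questions whose predicted answer set exactly matches the ground truth."""
    correct = sum(1 for a, t in zip(predictions, truths) if set(a) == set(t))
    return 100.0 * correct / len(predictions)


preds = [["chair"], ["sofa"]]
gold = [["chair"], ["chair"]]
```

With these made-up similarities, exact-match accuracy is 50.0, WUPS at 0.0 gives "sofa" almost full credit for "chair" (93.0), and WUPS at 0.9 nearly zeroes that credit out (about 54.3), which is why the strict threshold is closer to plain accuracy.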

Implications: Because the query is refined between layers, a SAN can first attend broadly to the objects mentioned in the question and then narrow its focus to the region that holds the answer, which may help on questions that require several reasoning steps.

Findings

Outcomes:

- SANs significantly outperformed previous state-of-the-art models across all evaluated datasets, demonstrating the effectiveness of multi-layer attention.

- Visualization of the attention layers showed the focus narrowing from layer to layer toward the regions relevant to the answer, illustrating the multi-step reasoning of SANs.

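- Error analysis grouped the remaining mistakes into four kinds: attending to the wrong region, attending to the right region but predicting a wrong answer, giving an answer that differs from the label but may be acceptable (ambiguous questions), and wrong labels, suggesting areas for further refinement.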

Significance: The research sets a new state of the art in image question answering and shows that stacking attention layers outperforms a single attention layer.

Future Work: Exploration of additional layers in SANs, integration with other neural architectures, and application to more diverse datasets could enhance the robustness and applicability of the model.

Potential Impact: Advancements in image question answering could lead to improvements in applications such as autonomous systems, educational tools, and enhanced user interaction with visual content.