Convolutional Neural Networks for Sentence Classification

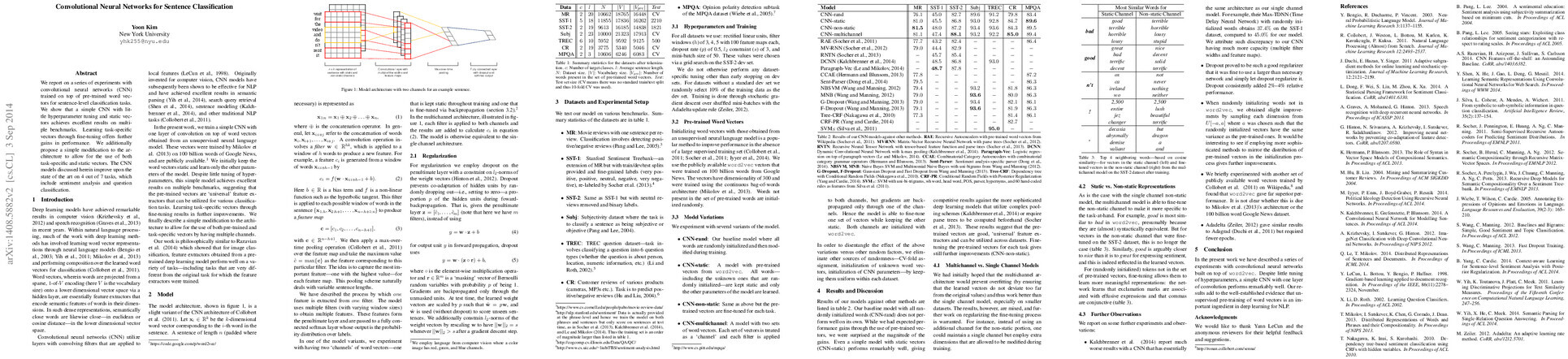

Abstract: We report on a series of experiments with convolutional neural networks (CNN) trained on top of pre-trained word vectors for sentence-level classification tasks. We show that a simple CNN with little hyperparameter tuning and static vectors achieves excellent results on multiple benchmarks. Learning task-specific vectors through fine-tuning offers further gains in performance. We additionally propose a simple modification to the architecture to allow for the use of both task-specific and static vectors. The CNN models discussed herein improve upon the state of the art on 4 out of 7 tasks, which include sentiment analysis and question classification.

Synopsis

Overview

- Keywords: Convolutional Neural Networks, Sentence Classification, Word Vectors, NLP, Fine-tuning

- Objective: Investigate the effectiveness of convolutional neural networks (CNNs) for sentence classification tasks using pre-trained word vectors.

- Hypothesis: CNNs can outperform traditional models in sentence classification by leveraging pre-trained word vectors and fine-tuning them for specific tasks.

- Innovation: A multichannel architecture that keeps one set of word vectors static while fine-tuning a second set, so the model can adapt to the task without discarding the general-purpose pre-trained representation.

Background

Preliminary Theories:

- Word Vectors: Dense representations of words that capture semantic meanings, allowing for effective feature extraction in NLP tasks.

- Convolutional Neural Networks (CNNs): Originally developed for computer vision, CNNs have been adapted for NLP by sliding filters over local windows of words and using the resulting features for classification.

- Transfer Learning: The concept of using pre-trained models or vectors to improve performance on related tasks, reducing the need for extensive labeled data.
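As a minimal sketch of how pre-trained word vectors become model input, the snippet below builds a sentence matrix from a toy lookup table. The `PRETRAINED` table, its 3-dimensional values, and the helper names `cosine` and `sentence_matrix` are hypothetical illustrations, not values or code from the paper. Giving out-of-vocabulary words a small random vector is an assumption made here for illustration.

```python
import math
import random

# Toy stand-in for pre-trained word vectors (hypothetical values, 3 dims).
PRETRAINED = {
    "good": [0.9, 0.1, 0.0],
    "great": [0.8, 0.2, 0.1],
    "bad": [-0.9, 0.1, 0.0],
    "movie": [0.0, 0.9, 0.3],
}
DIM = 3


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def sentence_matrix(tokens, table, rng):
    """Stack one vector per token; unknown words get a small random vector."""
    rows = []
    for tok in tokens:
        if tok in table:
            rows.append(list(table[tok]))  # copy so the table is not mutated
        else:
            rows.append([rng.uniform(-0.25, 0.25) for _ in range(DIM)])
    return rows


rng = random.Random(0)
matrix = sentence_matrix(["great", "movie", "indeed"], PRETRAINED, rng)
print(len(matrix), len(matrix[0]))  # number of tokens, vector dimension

# Related words should sit closer together than unrelated ones.
print(cosine(PRETRAINED["good"], PRETRAINED["great"]))
print(cosine(PRETRAINED["good"], PRETRAINED["bad"]))
```

In this toy table, "good" is far closer to "great" than to "bad", which is the property that lets a classifier reuse the vectors as features on a new task.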

Prior Research:

- 2011: Collobert et al. introduced deep learning methods for NLP, demonstrating significant improvements in various tasks using neural networks.

- 2013: Mikolov et al. developed word2vec, a method for generating word embeddings that capture semantic relationships, widely adopted in NLP.

- 2014: Kalchbrenner et al. presented a CNN for sentence modelling that achieved strong results using dynamic k-max pooling, a more complex pooling scheme than the max-over-time pooling adopted in this paper.

Methodology

Key Ideas:

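- CNN Architecture: Utilizes one convolutional layer with multiple filter widths (windows of 3, 4, and 5 words in the reported experiments) to capture n-gram features of different lengths from sentences.

- Multichannel Approach: Uses two copies of the pre-trained word vectors as separate channels. One copy is kept static and the other is fine-tuned during training, and each filter is applied to both channels with the results summed.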

- Pooling Mechanism: Implements max-over-time pooling to extract the most salient features from the convolutional output, accommodating variable sentence lengths.
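The three ideas above can be sketched together in a few lines of plain Python. This is an illustrative toy under stated assumptions, not the paper's implementation: the function names (`conv_feature_map`, `max_over_time`, `encode`), the 2-dimensional vectors, and the hand-picked filter weights are all hypothetical, and ReLU is chosen here as the nonlinearity.

```python
def conv_feature_map(channels, filt, bias):
    """Slide one filter over the sentence and return its feature map.

    channels: list of sentence matrices (each n_words x dim), for example
              one static and one fine-tuned copy of the embeddings.
    filt:     h x dim weights, where h is the filter width in words.
    The same filter is applied to every channel and the results are summed.
    """
    h = len(filt)
    n = len(channels[0])
    feature_map = []
    for i in range(n - h + 1):  # one output per window of h words
        total = bias
        for sent in channels:
            for j in range(h):
                total += sum(w * x for w, x in zip(filt[j], sent[i + j]))
        feature_map.append(max(0.0, total))  # ReLU (assumed here)
    return feature_map


def max_over_time(feature_map):
    """Keep only the largest activation, whatever the sentence length."""
    return max(feature_map)


def encode(channels, filters):
    """One pooled value per filter gives a fixed-size sentence vector."""
    return [max_over_time(conv_feature_map(channels, f, b)) for f, b in filters]


# Toy 4-word sentence with 2-dimensional vectors (hypothetical values).
static = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
tuned = [[0.5, 0.0], [0.0, 0.5], [0.5, 0.5], [0.0, 0.0]]

filters = [
    ([[1.0, 0.0], [0.0, 1.0]], 0.0),               # width 2: bigram filter
    ([[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]], -1.0),  # width 3: trigram filter
]

short = encode([static, tuned], filters)
longer = encode([static + static, tuned + tuned], filters)
print(short)                      # one value per filter
print(len(short) == len(longer))  # same size for 4-word and 8-word input
```

Because pooling keeps a single value per filter, the 4-word and 8-word sentences both map to a vector whose length equals the number of filters, which is what lets a fixed-size classifier layer sit on top.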

Experiments:

- Evaluated on seven datasets: MR (movie reviews), SST-1 and SST-2 (Stanford Sentiment Treebank, fine-grained and binary), Subj (subjectivity), TREC (question classification), CR (customer reviews), and MPQA (opinion polarity).

- Models compared include CNN-rand (all word vectors randomly initialized and learned during training), CNN-static (pre-trained vectors kept fixed), CNN-non-static (pre-trained vectors fine-tuned for each task), and CNN-multichannel (one static and one fine-tuned set of vectors).

- Results are reported as classification accuracy on each task.
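One way to keep the four model variants straight is to record, for each, whether the vectors start from pre-trained values and which channels are updated during training. The sketch below does that and adds a plain accuracy function. The `Variant` class, its field names, and the example labels are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    name: str
    pretrained_init: bool  # start from pre-trained vectors?
    trainable: tuple       # one flag per channel: are the vectors updated?


VARIANTS = [
    Variant("CNN-rand", pretrained_init=False, trainable=(True,)),
    Variant("CNN-static", pretrained_init=True, trainable=(False,)),
    Variant("CNN-non-static", pretrained_init=True, trainable=(True,)),
    Variant("CNN-multichannel", pretrained_init=True, trainable=(False, True)),
]


def accuracy(predicted, gold):
    """Fraction of sentences whose predicted label matches the gold label."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    if not gold:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(p == g for p, g in zip(predicted, gold))
    return correct / len(gold)


for v in VARIANTS:
    print(f"{v.name}: channels={len(v.trainable)}, trainable={v.trainable}")

# Made-up labels: 3 of the 4 predictions match the gold labels.
print(accuracy(["pos", "neg", "pos", "neg"], ["pos", "neg", "neg", "neg"]))
```

Only the multichannel variant has two channels; the other three differ solely in how the single channel is initialized and whether it is updated.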

Implications: The methodology emphasizes the importance of pre-trained vectors and fine-tuning in achieving high performance in NLP tasks, suggesting that even simple CNN architectures can yield competitive results.

Findings

Outcomes:

- CNNs with static word vectors achieved competitive results, indicating the effectiveness of pre-trained embeddings as universal feature extractors.

- Fine-tuning the pre-trained vectors resulted in improved performance, particularly in sentiment analysis tasks.

- The multichannel model showed mixed results compared to single-channel models, suggesting that further work on regularizing the fine-tuning process is warranted.

Significance: This research demonstrates that CNNs can effectively leverage pre-trained word vectors, improving on the previous state of the art on 4 of the 7 tasks and contributing to the growing body of evidence supporting deep learning in NLP.

Future Work: Suggested avenues include refining the multichannel architecture, exploring alternative regularization methods, and testing with additional datasets to further validate findings.

Potential Impact: Advancements in CNN architectures for sentence classification could lead to more robust NLP applications, enhancing capabilities in sentiment analysis, question classification, and beyond.