Learning to learn by gradient descent by gradient descent

Abstract: The move from hand-designed features to learned features in machine learning has been wildly successful. In spite of this, optimization algorithms are still designed by hand. In this paper we show how the design of an optimization algorithm can be cast as a learning problem, allowing the algorithm to learn to exploit structure in the problems of interest in an automatic way. Our learned algorithms, implemented by LSTMs, outperform generic, hand-designed competitors on the tasks for which they are trained, and also generalize well to new tasks with similar structure. We demonstrate this on a number of tasks, including simple convex problems, training neural networks, and styling images with neural art.

Synopsis

Overview

- Keywords: Meta-learning, optimization algorithms, LSTM, gradient descent, neural networks

- Objective: To propose a method for learning optimization algorithms using recurrent neural networks, specifically LSTMs, to outperform traditional hand-designed optimizers.

- Hypothesis: Learning to optimize can yield better performance than conventional optimization methods by adapting to the structure of specific problems.

- Innovation: Introduction of a learned optimizer that utilizes LSTMs to dynamically adjust updates based on historical gradient information, enabling superior performance across various tasks.

Background

Preliminary Theories:

- Gradient Descent: A fundamental optimization technique that updates parameters based on the gradient of the loss function, but often limited by its reliance on first-order information.

- Meta-learning: The concept of learning how to learn, where algorithms are designed to adapt their learning strategies based on previous experiences or tasks.

- Long Short-Term Memory (LSTM): A type of recurrent neural network architecture capable of learning long-term dependencies, making it suitable for tasks requiring memory of past states.

- Transfer Learning: The ability of a model to generalize knowledge from one task to another, crucial for the proposed optimizers to perform well on unseen problems.

Prior Research:

- 1990s: Early explorations into learning algorithms, including work by Bengio on learning rules for neural networks.

- 2016: Recent advancements in meta-learning frameworks, notably by Lake et al. and Santoro et al., emphasizing the importance of generalization across tasks.

- 2016: The introduction of neural Turing machines, which inspired the architecture of the proposed LSTM optimizers.

Methodology

Key Ideas:

- LSTM Optimizer: The core innovation is the use of LSTMs to learn the update rules for optimization, allowing the optimizer to adapt based on the history of gradients.

- Coordinatewise Updates: The optimizer operates on each parameter independently, which simplifies the architecture and allows for efficient training.

- Dynamic Learning: The optimizer's updates are informed by past gradients, integrating concepts of momentum and adaptive learning rates.

Experiments:

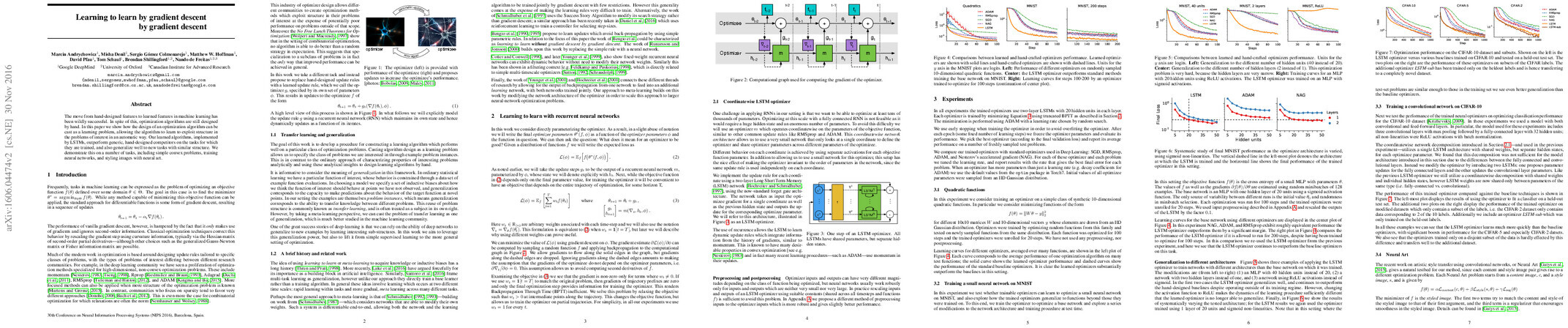

- Quadratic Functions: Evaluated the optimizer on synthetic 10-dimensional quadratic functions, demonstrating superior performance compared to traditional optimizers like SGD and ADAM.

- MNIST Dataset: Tested the optimizer on training a small neural network, showcasing its ability to generalize across different architectures and training conditions.

- CIFAR-10 Classification: Assessed the optimizer's performance on a convolutional network, revealing its effectiveness in handling more complex tasks.

- Neural Art: Applied the optimizer to artistic style transfer tasks, illustrating its adaptability to diverse optimization problems.

Implications: The design of the optimizer allows for the potential to learn from a variety of tasks, improving efficiency and effectiveness in training neural networks.

Findings

Outcomes:

- The LSTM optimizer consistently outperformed traditional optimizers across various tasks, demonstrating its ability to adapt and generalize.

- Generalization was particularly strong when the tasks shared similar structures, confirming the hypothesis of effective transfer learning.

- The optimizer exhibited dynamic behavior, adjusting its updates based on the history of gradients, which contributed to its superior performance.

Significance: This research challenges the traditional reliance on hand-designed optimization algorithms, suggesting that learned optimizers can provide significant advantages in performance and adaptability.

Future Work: Further exploration into more complex architectures, additional datasets, and refining the optimizer's learning mechanisms could enhance its capabilities.

Potential Impact: Advancements in learned optimization could lead to more efficient training of deep learning models, enabling faster convergence and improved performance across a wider range of applications.