Least Squares Generative Adversarial Networks

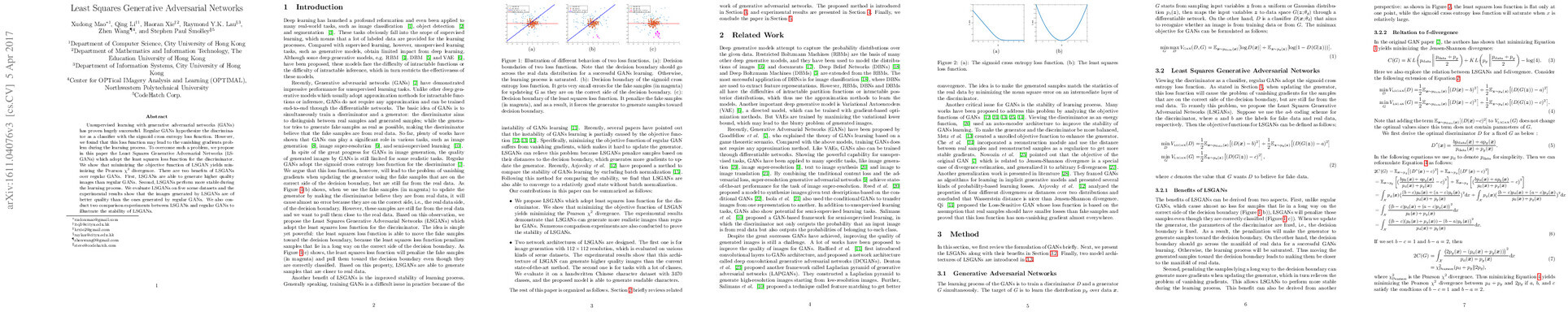

Abstract: Unsupervised learning with generative adversarial networks (GANs) has proven hugely successful. Regular GANs model the discriminator as a classifier with the sigmoid cross entropy loss function. However, we found that this loss function may lead to the vanishing gradients problem during the learning process. To overcome such a problem, we propose in this paper the Least Squares Generative Adversarial Networks (LSGANs), which adopt the least squares loss function for the discriminator. We show that minimizing the objective function of LSGAN amounts to minimizing the Pearson $\chi^2$ divergence. There are two benefits of LSGANs over regular GANs. First, LSGANs are able to generate higher-quality images than regular GANs. Second, LSGANs are more stable during the learning process. We evaluate LSGANs on five scene datasets and the experimental results show that the images generated by LSGANs are of better quality than the ones generated by regular GANs. We also conduct two comparison experiments between LSGANs and regular GANs to illustrate the stability of LSGANs.
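The χ² claim can be made concrete with the objective used in the paper. Here $a$ and $b$ are the discriminator's target labels for generated and real data, $c$ is the value the generator wants the discriminator to output on generated data, and $p_d$, $p_g$, $p_z$ denote the data, generator, and noise distributions:

$$\min_D V(D) = \tfrac{1}{2}\,\mathbb{E}_{x\sim p_d}\big[(D(x)-b)^2\big] + \tfrac{1}{2}\,\mathbb{E}_{z\sim p_z}\big[(D(G(z))-a)^2\big]$$

$$\min_G V(G) = \tfrac{1}{2}\,\mathbb{E}_{z\sim p_z}\big[(D(G(z))-c)^2\big]$$

For a fixed $G$, minimizing $V(D)$ pointwise gives the optimal discriminator

$$D^*(x) = \frac{b\,p_d(x) + a\,p_g(x)}{p_d(x) + p_g(x)}.$$

Adding $\tfrac{1}{2}\,\mathbb{E}_{x\sim p_d}\big[(D(x)-c)^2\big]$ to $V(G)$ does not change the optimal $G$, because this term does not depend on $G$. Substituting $D^*$ into the extended objective $C(G)$ gives

$$2C(G) = \int \frac{\big((b-c)\,p_d(x) + (a-c)\,p_g(x)\big)^2}{p_d(x) + p_g(x)}\,dx.$$

Choosing $b-c=1$ and $b-a=2$ (so $a-c=-1$, e.g. $a=-1$, $b=1$, $c=0$) turns this into

$$2C(G) = \int \frac{\big(2p_g(x) - (p_d(x) + p_g(x))\big)^2}{p_d(x) + p_g(x)}\,dx,$$

a Pearson χ² divergence between $p_d + p_g$ and $2p_g$, which is zero exactly when $p_g = p_d$.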

Synopsis

Overview

- Keywords: Generative Adversarial Networks, Least Squares, Image Generation, Unsupervised Learning, Stability

- Objective: Introduce Least Squares Generative Adversarial Networks (LSGANs) to improve image generation quality and training stability.

- Hypothesis: The use of a least squares loss function for the discriminator in GANs can alleviate the vanishing gradient problem and enhance the quality of generated images.

- Innovation: LSGANs replace the traditional sigmoid cross-entropy loss with a least squares loss, leading to better image quality and more stable training dynamics.

Background

Preliminary Theories:

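- Generative Adversarial Networks (GANs): A framework in which two neural networks are trained against each other: a generator maps random noise to samples, and a discriminator learns to distinguish those samples from real data. The two are updated in alternation until the generated data is hard to tell apart from real data.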

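- Vanishing Gradients: Gradients that become too small to drive effective learning. In GANs with a sigmoid cross-entropy discriminator, this happens when generated samples already lie on the real side of the decision boundary: the loss saturates, so the generator receives almost no signal even though those samples may still be far from the real data.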

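- Pearson χ² Divergence: A measure of how much one probability distribution differs from another, based on the squared difference of their densities weighted by the inverse of one of them. The LSGAN paper shows that, for suitable choices of the target labels, minimizing the LSGAN objective amounts to minimizing a Pearson χ² divergence.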

- Least Squares Loss: A loss that penalizes the squared difference between predicted and target values. Unlike the sigmoid cross-entropy loss, it does not saturate as the prediction moves away from the target, so its gradient keeps growing with the error.
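To make the Pearson χ² entry concrete, here is a minimal NumPy sketch for discrete distributions. The function name `pearson_chi2` is hypothetical, and the sketch assumes the common convention $\sum_x (p(x)-q(x))^2/q(x)$ with the second argument in the denominator; some sources swap the roles of the two arguments, so check the convention before comparing numbers.

```python
import numpy as np

def pearson_chi2(p, q):
    """Pearson chi-squared divergence: sum over x of (p(x) - q(x))**2 / q(x).

    Assumes p and q are nonnegative arrays over the same finite support and
    that q is strictly positive everywhere (otherwise the sum is undefined).
    Convention assumed here: q, the second argument, is in the denominator.
    """
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    if np.any(q <= 0):
        raise ValueError("q must be strictly positive on the support")
    return float(np.sum((p - q) ** 2 / q))

# Identical distributions give zero; swapping the arguments changes the value,
# because the divergence is not symmetric.
p = np.array([0.5, 0.5])
q = np.array([0.9, 0.1])
print(pearson_chi2(p, p))
print(pearson_chi2(p, q))
print(pearson_chi2(q, p))
```

The asymmetry matters for GANs: which distribution sits in the denominator determines where mismatches are weighted most heavily.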

Prior Research:

- 2014: Introduction of GANs by Goodfellow et al., demonstrating their effectiveness in generating realistic data.

- 2016: Development of Deep Convolutional GANs (DCGANs), enhancing GAN architectures for better image generation.

- 2017: Introduction of Wasserstein GANs (WGANs), which address training instability by minimizing an approximation of the Earth Mover's (Wasserstein-1) distance instead of the Jensen-Shannon divergence.

- 2016: Feature matching techniques proposed to improve convergence in GANs by aligning statistics of generated and real data.

Methodology

Key Ideas:

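- Discriminator Loss Function: LSGANs replace the sigmoid cross-entropy loss with a least squares loss that pushes the discriminator's output toward a target label b on real data and a on generated data. Because the penalty grows with the distance from the target, samples are penalized according to how far they lie from the decision boundary, even when they are correctly classified.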

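- Generator Update Mechanism: With the discriminator held fixed, the generator is updated to push the discriminator's output on generated samples toward a target value c. Since the least squares loss still penalizes generated samples that lie far from the decision boundary on the real-data side, these samples are pulled toward the boundary, which yields more informative gradients than the saturating sigmoid cross-entropy loss.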

- Model Architecture: Two architectures are proposed: one for general scene image generation, and a conditional one for generating handwritten Chinese characters, a task with a very large number of classes.
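The loss functions described in the key ideas above can be sketched in a few lines of NumPy. This is an illustrative sketch, not the authors' code: the function names are hypothetical, the inputs are assumed to be raw discriminator outputs (no sigmoid applied), and the defaults a=-1, b=1, c=0 are the label choice under which the objective corresponds to a Pearson χ² divergence.

```python
import numpy as np

def lsgan_d_loss(d_real, d_fake, a=-1.0, b=1.0):
    """Discriminator loss: 0.5*E[(D(x)-b)^2] + 0.5*E[(D(G(z))-a)^2].

    d_real, d_fake: raw (un-squashed) discriminator outputs on a batch of
    real and generated samples. Outputs on real data are pushed toward b,
    outputs on generated data toward a.
    """
    d_real = np.asarray(d_real, dtype=float)
    d_fake = np.asarray(d_fake, dtype=float)
    return 0.5 * np.mean((d_real - b) ** 2) + 0.5 * np.mean((d_fake - a) ** 2)

def lsgan_g_loss(d_fake, c=0.0):
    """Generator loss: 0.5*E[(D(G(z))-c)^2], with the discriminator held fixed.

    Only the generator's parameters would be updated with this loss; the
    discriminator outputs are treated as coming from a frozen network.
    """
    d_fake = np.asarray(d_fake, dtype=float)
    return 0.5 * np.mean((d_fake - c) ** 2)

# Example batch of raw discriminator outputs (made-up numbers for illustration).
d_real = np.array([0.8, 1.2])
d_fake = np.array([-0.9, 0.5])
print(lsgan_d_loss(d_real, d_fake))
print(lsgan_g_loss(d_fake))
```

The paper also describes a 0-1 coding variant (a=0, b=c=1) that asks the generator to make samples look as real as possible; it corresponds to calling these functions with `a=0.0, b=1.0` and `c=1.0`. In a real training loop, these losses would be computed on framework tensors so that an autodiff library can backpropagate through D and G.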

Experiments:

- Datasets: Evaluated on five LSUN scene categories and a handwritten Chinese character dataset (HWDB1.0).

- Metrics: Image quality was assessed by visual inspection and by comparison with images generated by regular GANs.

- Stability Comparison: Two comparison experiments contrasted the training stability of LSGANs and regular GANs under different training conditions.

Implications: The design of LSGANs makes training more robust, reducing the likelihood of mode collapse and improving the quality of generated outputs.

Findings

Outcomes:

- LSGANs produce higher-quality images than regular GANs on the evaluated datasets, as judged by visual comparison.

- Training is more stable: in the comparison experiments, LSGANs were less sensitive to changes in the training setup than regular GANs.

- LSGANs mitigate the vanishing gradient problem: because the least squares loss does not saturate, generated samples that lie far from the real data still produce useful gradients for the generator.
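The vanishing-gradient finding can be illustrated by differentiating each generator loss with respect to the discriminator's raw output s on a single generated sample. This sketch makes two assumptions: the regular GAN uses the commonly used non-saturating generator loss -log(sigmoid(s)), and the LSGAN generator target is c=0 (the paper's notation for the value the generator wants the discriminator to output). It shows only dLoss/ds; the gradient reaching the generator's parameters is this value multiplied by ds/dtheta via the chain rule. The comparison is about magnitude: the two gradients point in opposite directions because the LSGAN loss pulls s back toward c.

```python
import numpy as np

def sigmoid(s):
    """Logistic function mapping a raw discriminator output to (0, 1)."""
    return 1.0 / (1.0 + np.exp(-s))

def grad_sigmoid_ce_generator(s):
    """d/ds of -log(sigmoid(s)), the non-saturating sigmoid cross-entropy
    generator loss for one generated sample with discriminator output s."""
    return sigmoid(s) - 1.0

def grad_least_squares_generator(s, c=0.0):
    """d/ds of 0.5 * (s - c)**2, the LSGAN generator loss for one sample."""
    return s - c

# Generated samples ranging from on the decision boundary (s=0) to far on
# the "real" side of it (large s).
for s in [0.0, 2.0, 5.0, 10.0]:
    print(f"s={s:5.1f}  sigmoid-CE grad={grad_sigmoid_ce_generator(s):+.5f}  "
          f"least-squares grad={grad_least_squares_generator(s):+.1f}")
```

As s grows, the sigmoid cross-entropy gradient shrinks toward zero, while the least squares gradient grows linearly, so samples far on the correct side of the boundary still receive a signal.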

Significance: LSGANs show that replacing the standard sigmoid cross-entropy discriminator loss with a simple least squares loss can improve both image quality and training stability, offering a viable alternative to the conventional GAN loss.

Future Work: Suggested directions include extending LSGANs to more complex datasets, such as ImageNet, and exploring methods that pull generated samples directly toward the real data instead of toward the decision boundary.

Potential Impact: Advancements in LSGANs could lead to significant improvements in various applications of generative models, including data augmentation, image synthesis, and semi-supervised learning.