Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks

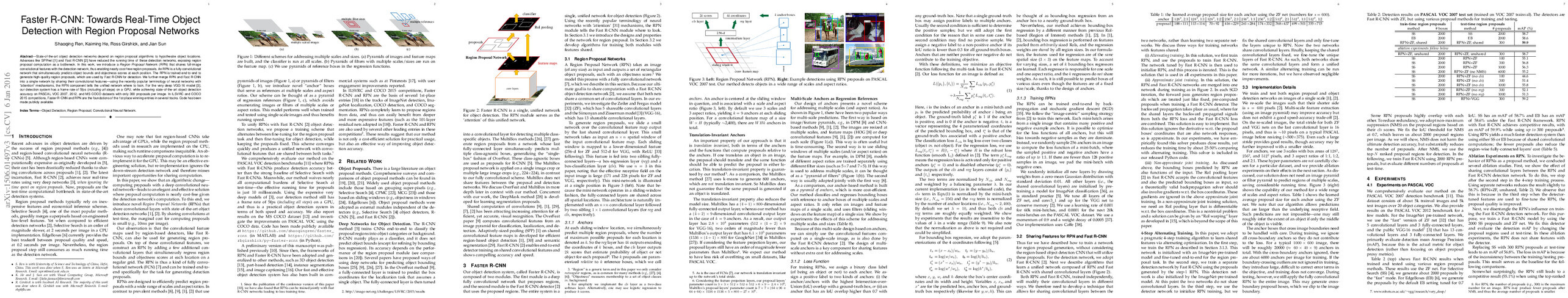

Abstract: State-of-the-art object detection networks depend on region proposal algorithms to hypothesize object locations. Advances like SPPnet and Fast R-CNN have reduced the running time of these detection networks, exposing region proposal computation as a bottleneck. In this work, we introduce a Region Proposal Network (RPN) that shares full-image convolutional features with the detection network, thus enabling nearly cost-free region proposals. An RPN is a fully convolutional network that simultaneously predicts object bounds and objectness scores at each position. The RPN is trained end-to-end to generate high-quality region proposals, which are used by Fast R-CNN for detection. We further merge RPN and Fast R-CNN into a single network by sharing their convolutional features. Using the recently popular terminology of neural networks with 'attention' mechanisms, the RPN component tells the unified network where to look. For the very deep VGG-16 model, our detection system has a frame rate of 5 fps (including all steps) on a GPU, while achieving state-of-the-art object detection accuracy on PASCAL VOC 2007, 2012, and MS COCO datasets with only 300 proposals per image. In ILSVRC and COCO 2015 competitions, Faster R-CNN and RPN are the foundations of the 1st-place winning entries in several tracks. Code has been made publicly available.

Synopsis

Overview

- Keywords: Object Detection, Region Proposal Networks, Convolutional Neural Networks, Faster R-CNN, Real-Time Detection

- Objective: Introduce a unified framework for object detection that integrates region proposal networks to enhance speed and accuracy.

- Hypothesis: The integration of a Region Proposal Network (RPN) with Fast R-CNN will significantly reduce the computational burden of region proposals while maintaining or improving detection accuracy.

Background

Preliminary Theories:

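- Region-Based CNNs (R-CNN): A foundational method for object detection that classifies externally generated region proposals (typically from Selective Search) with a CNN. Because the network is run separately on each proposal, detection is computationally expensive.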

- Fast R-CNN: An improvement over R-CNN that shares convolutional features across proposals, significantly reducing detection time but still reliant on external region proposal methods.

- Selective Search: A widely used region proposal method that greedily merges superpixels based on low-level features. It is slow, taking on the order of 2 seconds per image.

- Fully Convolutional Networks (FCNs): Networks designed to take inputs of arbitrary sizes and produce outputs of corresponding sizes, allowing for end-to-end training.

Prior Research:

- 2014: Introduction of SPPnet, which allowed for spatial pyramid pooling in CNNs, improving speed in object detection.

- 2014: Introduction of EdgeBoxes, providing a faster alternative to Selective Search for generating object proposals.

- 2015: Fast R-CNN proposed, which optimized the R-CNN framework by sharing convolutional layers across proposals, achieving near real-time rates when the time spent on region proposals is excluded.

Methodology

Key Ideas:

- Region Proposal Network (RPN): A fully convolutional network that predicts object bounds and objectness scores, sharing convolutional features with the detection network.

- Anchor Boxes: Reference boxes of multiple scales and aspect ratios placed at every sliding-window position. They let the RPN generate proposals efficiently from a single-scale image, without processing an image pyramid.

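- End-to-End Training: The RPN is trained end-to-end to generate proposals, and the RPN and Fast R-CNN are then trained to share convolutional features (the paper describes an alternating training scheme as well as an approximate joint training variant), so that proposal quality benefits from the shared features.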
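The anchor idea above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the three scales (128, 256, 512 pixels), three aspect ratios (1:2, 1:1, 2:1), and the feature stride of 16 are the defaults reported in the paper rather than in this synopsis, and the function names `base_anchors` and `grid_anchors` are hypothetical.

```python
import numpy as np

def base_anchors(scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return (len(scales) * len(ratios), 4) anchors centered at the origin,
    as (x1, y1, x2, y2). Each anchor has area scale**2 and ratio = height / width."""
    anchors = []
    for s in scales:
        area = float(s) ** 2
        for r in ratios:
            w = np.sqrt(area / r)
            h = w * r
            anchors.append([-w / 2, -h / 2, w / 2, h / 2])
    return np.array(anchors)

def grid_anchors(feat_h, feat_w, stride=16):
    """Tile the base anchors over every position of a feat_h x feat_w feature map."""
    base = base_anchors()
    # Center of each feature-map cell, expressed in image coordinates.
    xs = (np.arange(feat_w) + 0.5) * stride
    ys = (np.arange(feat_h) + 0.5) * stride
    cx, cy = np.meshgrid(xs, ys)
    shifts = np.stack([cx, cy, cx, cy], axis=-1).reshape(-1, 1, 4)
    return (shifts + base[None, :, :]).reshape(-1, 4)

anchors = grid_anchors(feat_h=38, feat_w=63)
print(anchors.shape)  # (21546, 4)
```

A 38 x 63 feature map (roughly what a 600 x 1000 image gives at stride 16) yields 38 * 63 * 9 = 21,546 anchors from a single forward pass, which is why no image pyramid is needed to cover multiple object sizes.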

Experiments:

- Evaluated on the PASCAL VOC 2007 and 2012 and MS COCO datasets, with RPN proposals compared against traditional methods such as Selective Search and EdgeBoxes.

- Metrics included mean Average Precision (mAP) for detection accuracy, with a focus on reducing the number of proposals while maintaining performance.
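Keeping accuracy while using fewer proposals depends on how overlapping proposals are filtered. The sketch below shows one common approach, assuming greedy non-maximum suppression (NMS) followed by a top-N cut. The IoU threshold of 0.7 is the value reported in the paper, the limit of 300 matches the abstract, and the function names `iou` and `nms_top_n` are hypothetical.

```python
import numpy as np

def iou(box, boxes):
    """IoU between one box and an (N, 4) array of boxes, all as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def nms_top_n(boxes, scores, iou_thresh=0.7, top_n=300):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it by more
    than iou_thresh, and repeat until top_n boxes are kept or none remain."""
    order = np.argsort(-scores)
    keep = []
    while order.size > 0 and len(keep) < top_n:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        order = rest[iou(boxes[i], boxes[rest]) <= iou_thresh]
    return keep

boxes = np.array([[0, 0, 10, 10], [0, 1, 10, 11], [50, 50, 60, 60]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
print(nms_top_n(boxes, scores))  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.82, so it is suppressed, while the distant third box survives.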

Implications: Because proposals are computed from features the detector already needs, their marginal cost is small, which brings object detection with very deep networks close to real-time rates.

Findings

Outcomes:

- With the very deep VGG-16 model, Faster R-CNN achieved a frame rate of 5 fps on a GPU (including all steps) while maintaining state-of-the-art accuracy on benchmark datasets.

- The RPN reduced the proposal generation time to approximately 10 milliseconds per image, compared to about 2 seconds per image for Selective Search.

- The system achieved higher mAP than pipelines based on traditional proposal methods while using only 300 proposals per image, indicating better proposal quality and detection accuracy.
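The timing figures above can be put in proportion with some quick arithmetic. This sketch uses only the rounded numbers quoted in this synopsis and treats them as directly comparable, which is an assumption, since the proposal methods may have been timed on different hardware.

```python
selective_search_ms = 2000  # about 2 s per image, as quoted above
rpn_ms = 10                 # about 10 ms per image, as quoted above
fps = 5                     # end-to-end frame rate with VGG-16, as quoted above

speedup = selective_search_ms / rpn_ms  # ratio of proposal times
frame_ms = 1000 / fps                   # total time available per frame
rpn_share = rpn_ms / frame_ms           # fraction of a frame spent on proposals

print(f"proposal speedup: {speedup:.0f}x")     # proposal speedup: 200x
print(f"per-frame budget: {frame_ms:.0f} ms")  # per-frame budget: 200 ms
print(f"RPN share of frame: {rpn_share:.0%}")  # RPN share of frame: 5%
```

Under these assumptions, proposal generation takes about a twentieth of the per-frame time, whereas Selective Search alone would take ten times the whole 200 ms budget.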

Significance: This research established a new standard for object detection systems by integrating proposal generation into the detection pipeline, contrasting with previous methods that treated these as separate processes.

Future Work: Potential improvements could include exploring deeper networks, refining anchor box strategies, and applying the framework to other tasks such as instance segmentation.

Potential Impact: Advancements in real-time object detection could significantly enhance applications in autonomous driving, surveillance, and robotics, leading to broader adoption of deep learning techniques in practical scenarios.